I created a multi-user AI agent that runs inside an e-commerce dashboard and can answer account-specific questions like “Where is my order?” or “Show me the invoice for order #6.” I built the agent with n8n and Supabase, and I focused heavily on security. In this article I’ll explain the full architecture, show the demo results, and walk you through seven concrete strategies I used to keep each user’s data isolated and safe.

The aim is simple: let customers self-serve while ensuring one user can never access another user’s data. Mistakes here create major breaches. Done right, this becomes a trusted self-service channel that reduces support costs.

What I built and why it matters

I built a self-service agent embedded in a WooCommerce dashboard. The agent can:

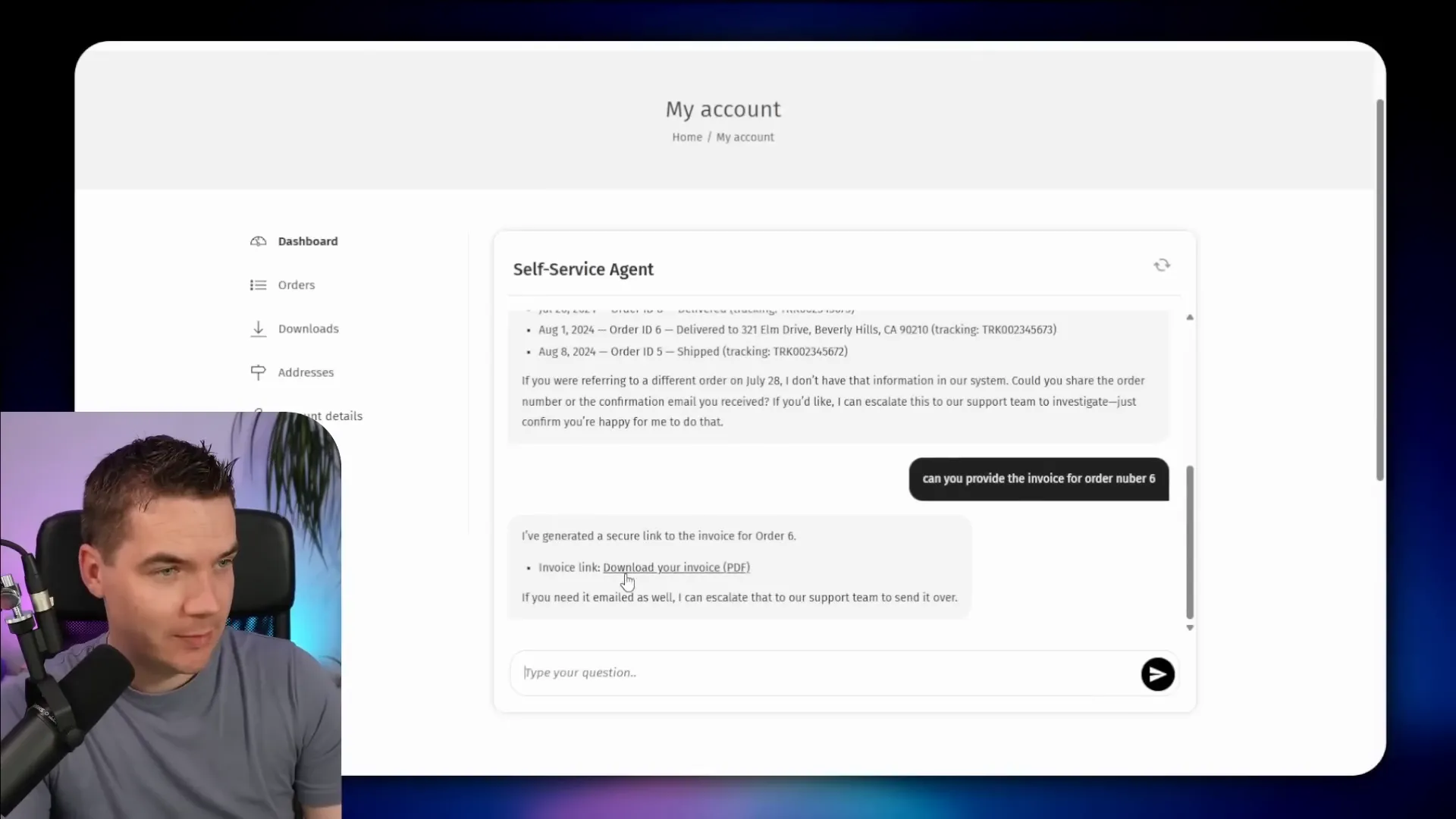

- Find orders tied to the logged-in customer.

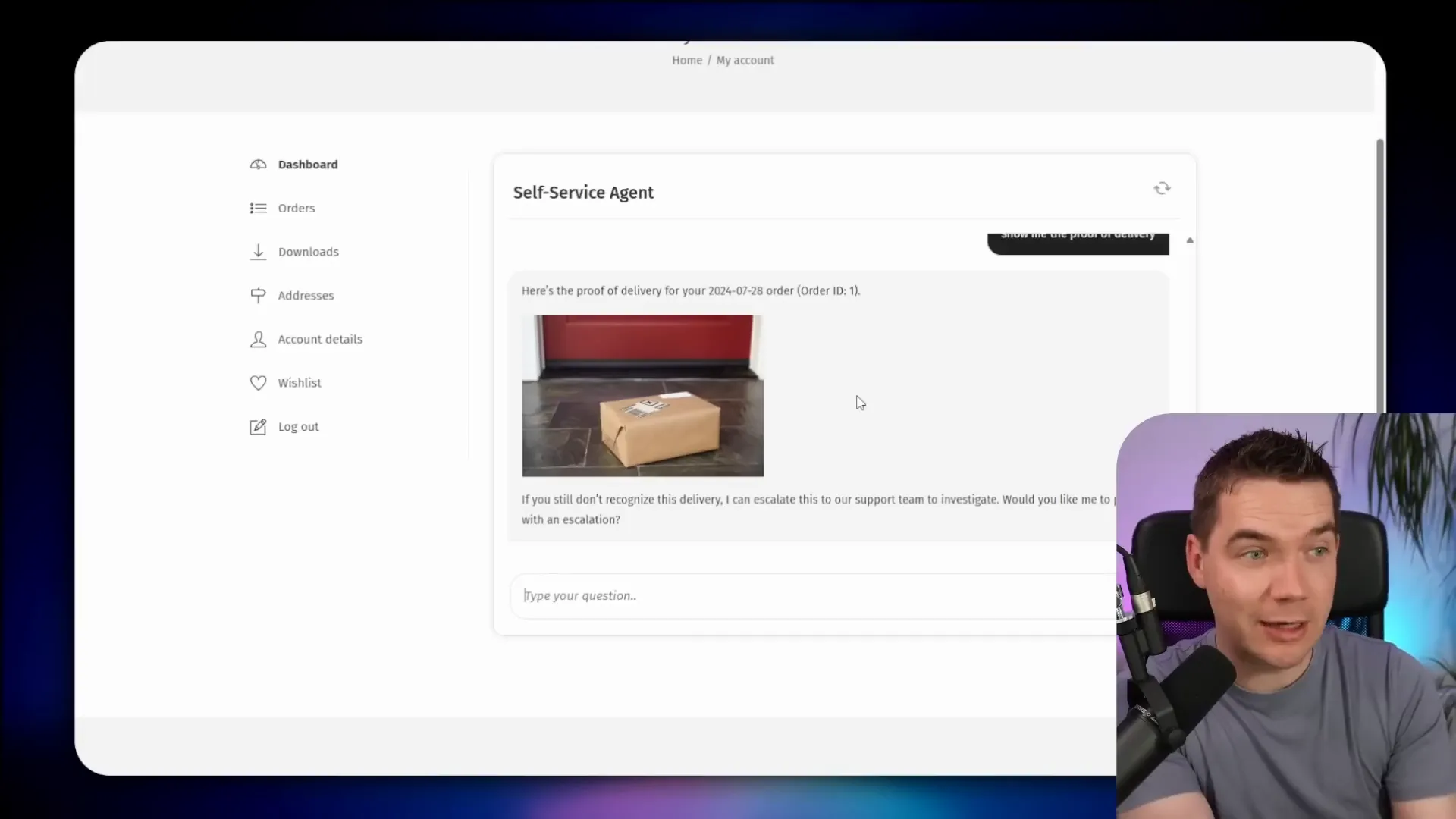

- Generate signed links to invoices and proof of delivery photos.

- Perform simple calculations like total spend over a date range.

- Escalate support issues into a shared inbox with context.

These are useful features for customers. They reduce repetitive support tasks. They also raise security demands. When the AI has access to personally identifiable information, you must be able to prove the agent only sees the right user’s data.

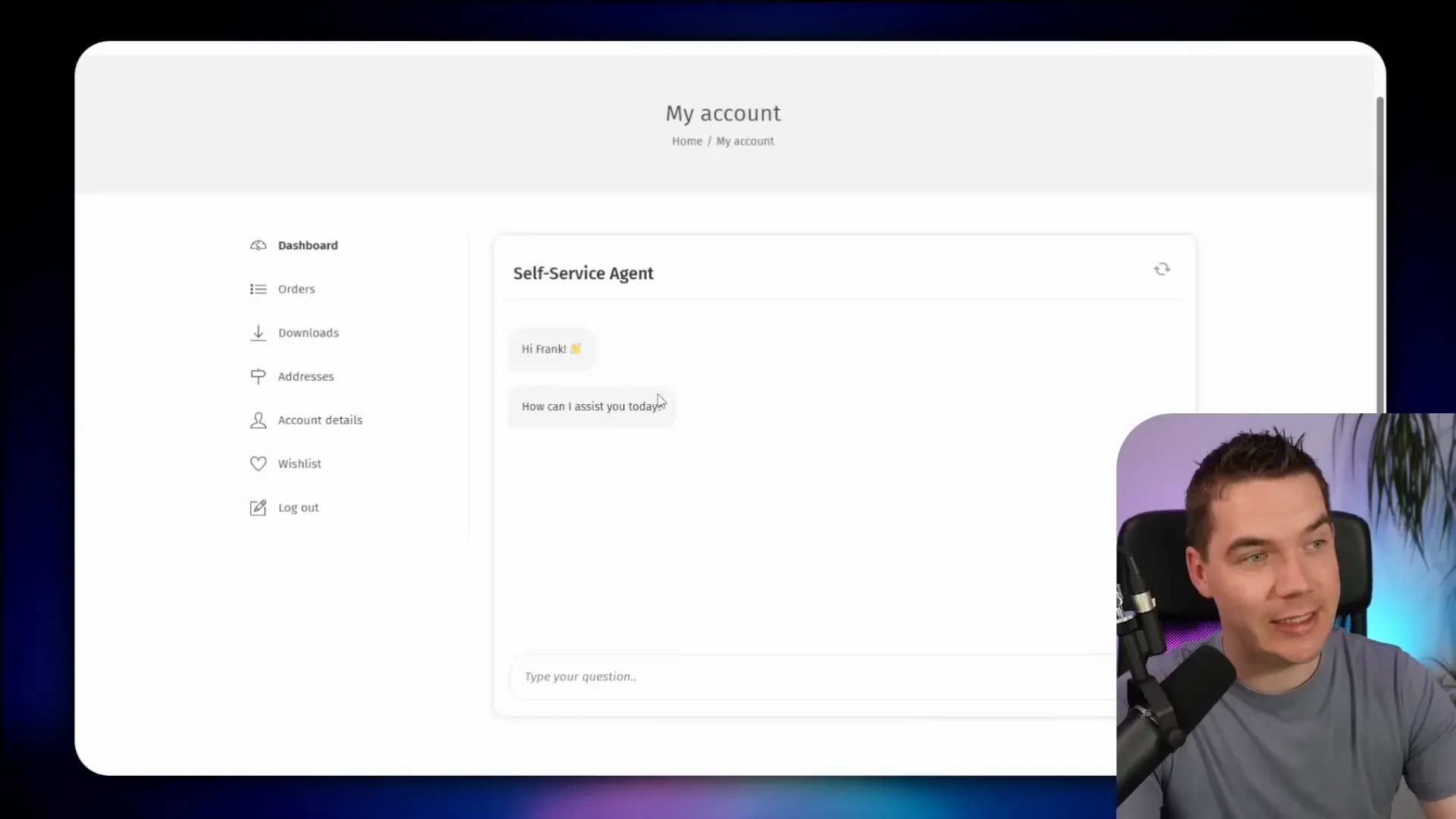

Quick demo highlights

In the demo I used test accounts to show data isolation. I logged in as one user, asked about an order placed on July 28, and the agent found the exact order. The agent offered a proof of delivery photo. I asked it to escalate the issue and it pushed a fully formed support escalation into our inbox, with order details and a link to the proof of delivery.

I then logged in as a different user and repeated the same question. The agent checked that user’s account, returned only that user’s orders, and served the invoice through a signed URL. The signed URL is generated by Supabase storage and expires after a configurable time.

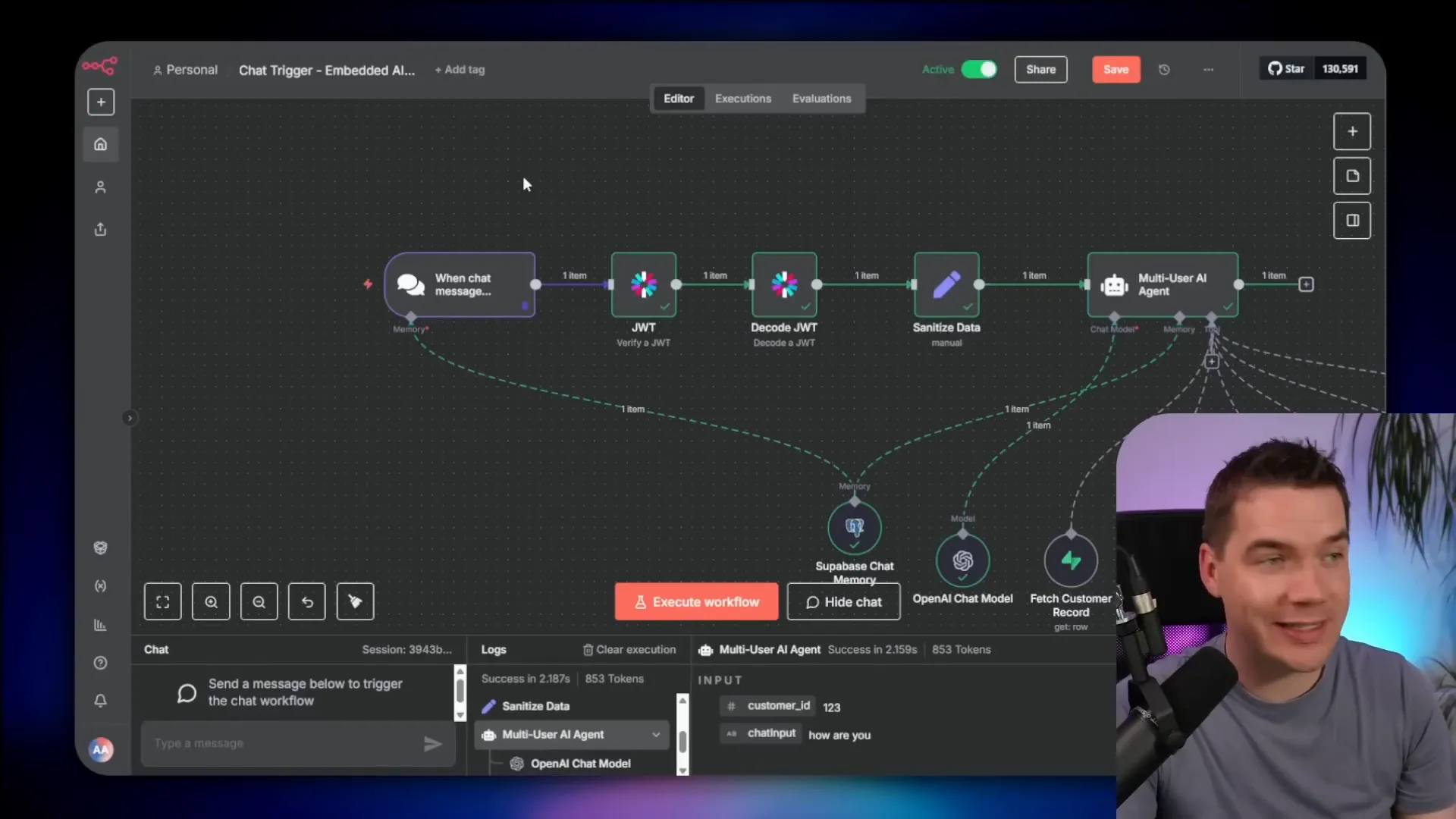

High-level architecture

The main components are:

- n8n: houses the AI agent, workflows, and chat trigger.

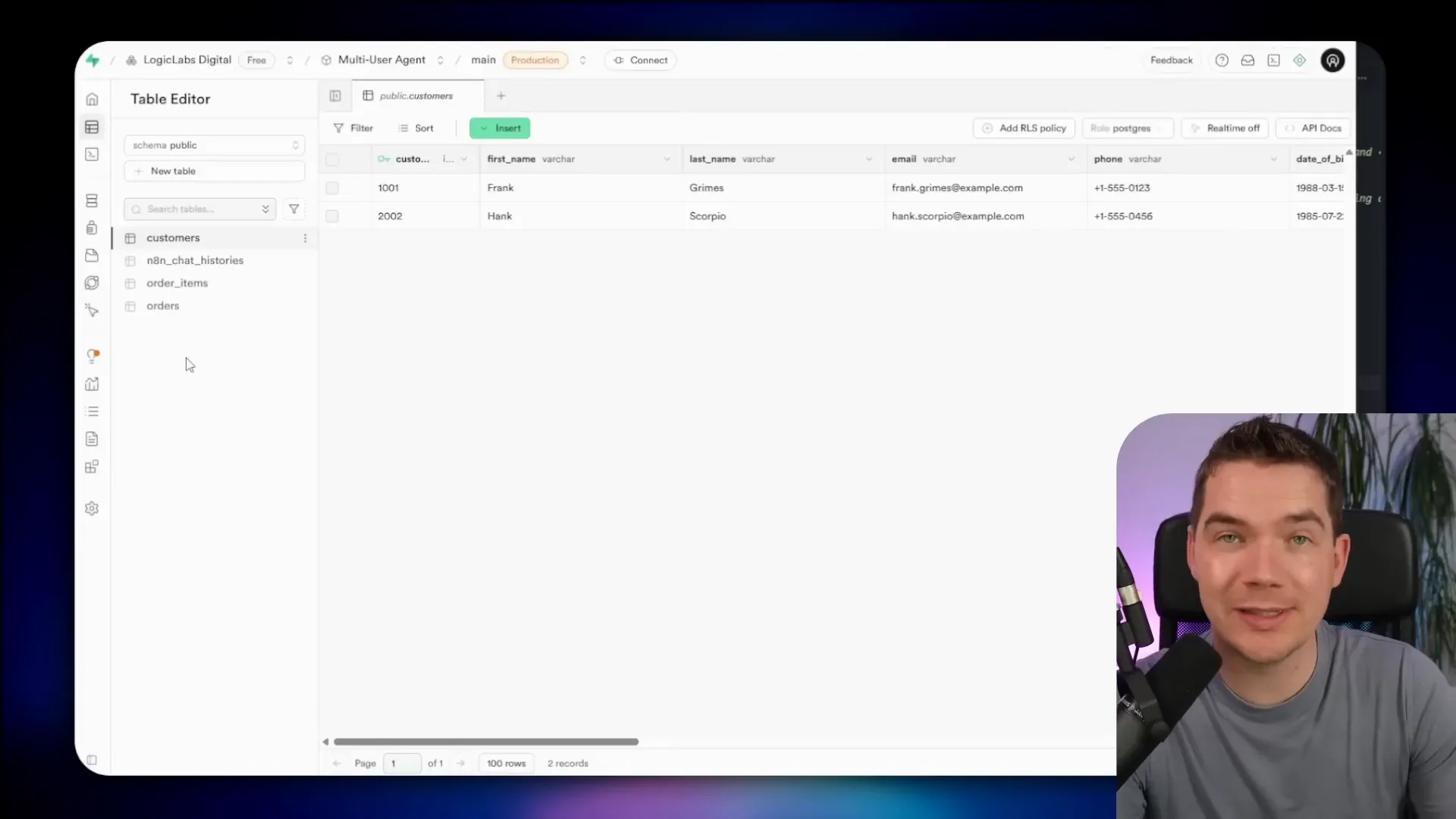

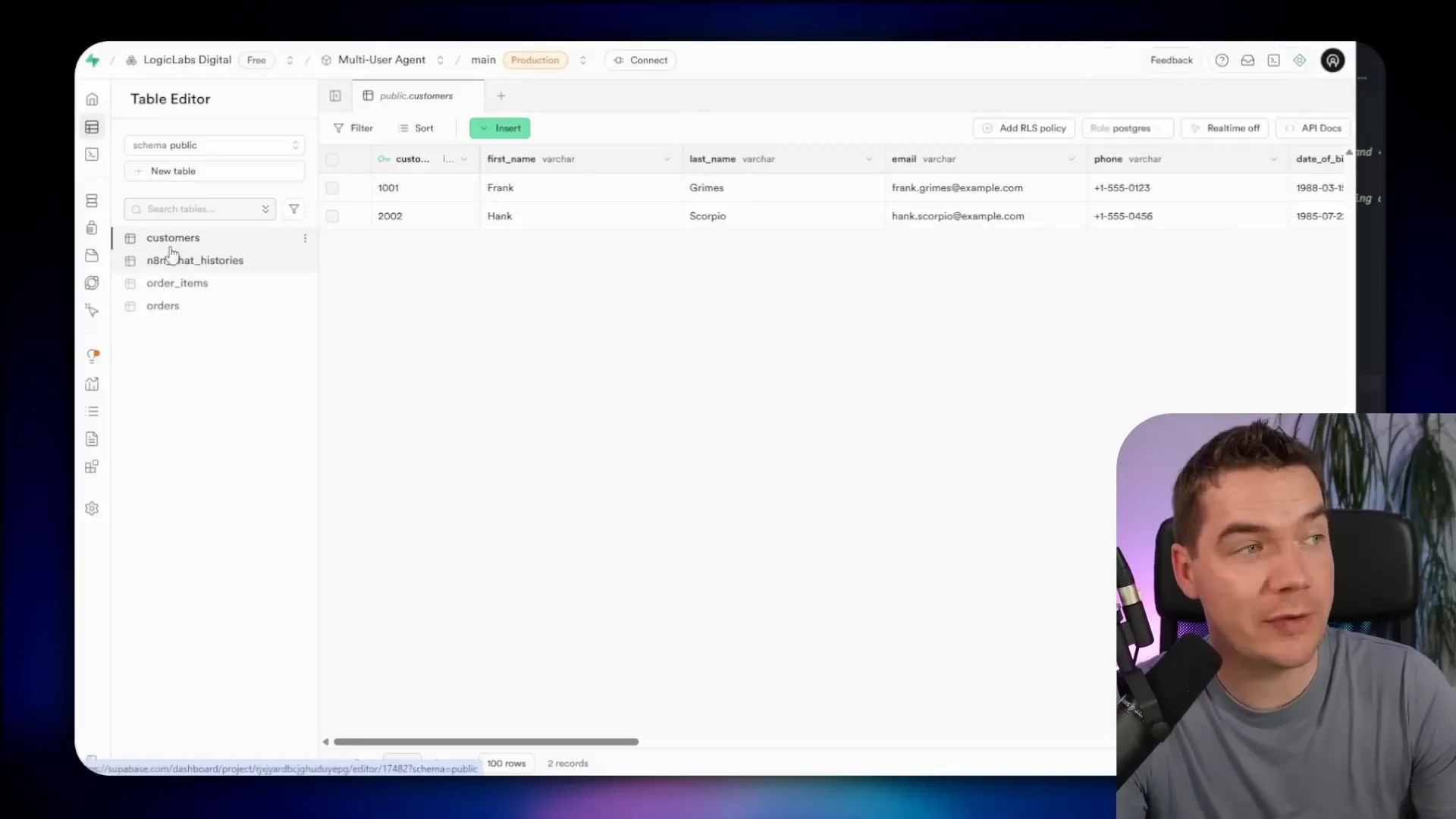

- Supabase: stores customers, orders, order items, chat histories, and documents (in storage buckets).

- WordPress: serves as the front end and an optional proxy for private chat embed.

- LLM provider: the language model (I used GPT-5 in the demo agent).

All pieces must work together with strict access controls. The tricky part is not making the agent — it’s stopping it from leaking data across users.

The difference between multi-user and multi-tenant systems

It helps to define terms because they affect security design.

- Multi-user: a single application with many individual users. A standard e-commerce store is usually multi-user. Each customer should only access their own data.

- Multi-tenant: a single app instance serves many separate organizations, each with users. Data separation here is between tenants rather than between individual users.

The strategies I explain for multi-user setups map directly to multi-tenant systems. Instead of customer IDs you use tenant IDs and add an extra layer of user management inside each tenant. The same isolation principles still apply.

Seven strategies to enforce data isolation and secure the architecture

I used a combination of application logic, infrastructure controls, and database rules. The seven strategies are:

- Hide n8n endpoints from the browser using a proxy.

- Authenticate and verify request origin with signed short-lived tokens (JWTs).

- Filter all tooling and database queries by an injected customer ID.

- Enable Row Level Security (RLS) on Supabase tables and buckets.

- Apply the principle of least privilege for all service credentials.

- Use step-up (multi-factor) authentication for sensitive data access.

- Enforce database-level access tokens and RLS policies where possible (zero trust).

I’ll walk through each strategy in detail, show where the risks are, and describe how I implemented the defense.

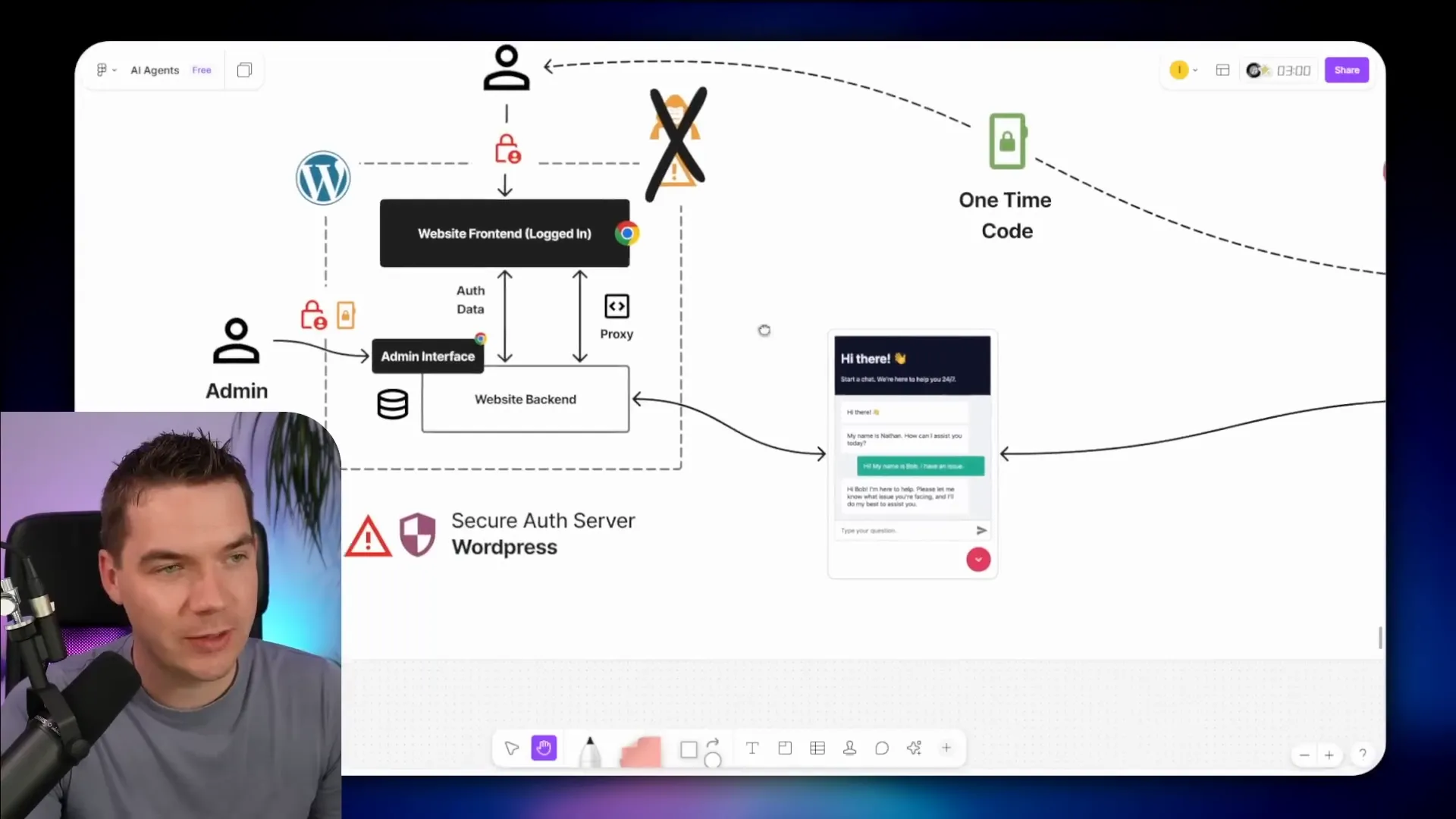

1. Hide n8n endpoints with a secure proxy

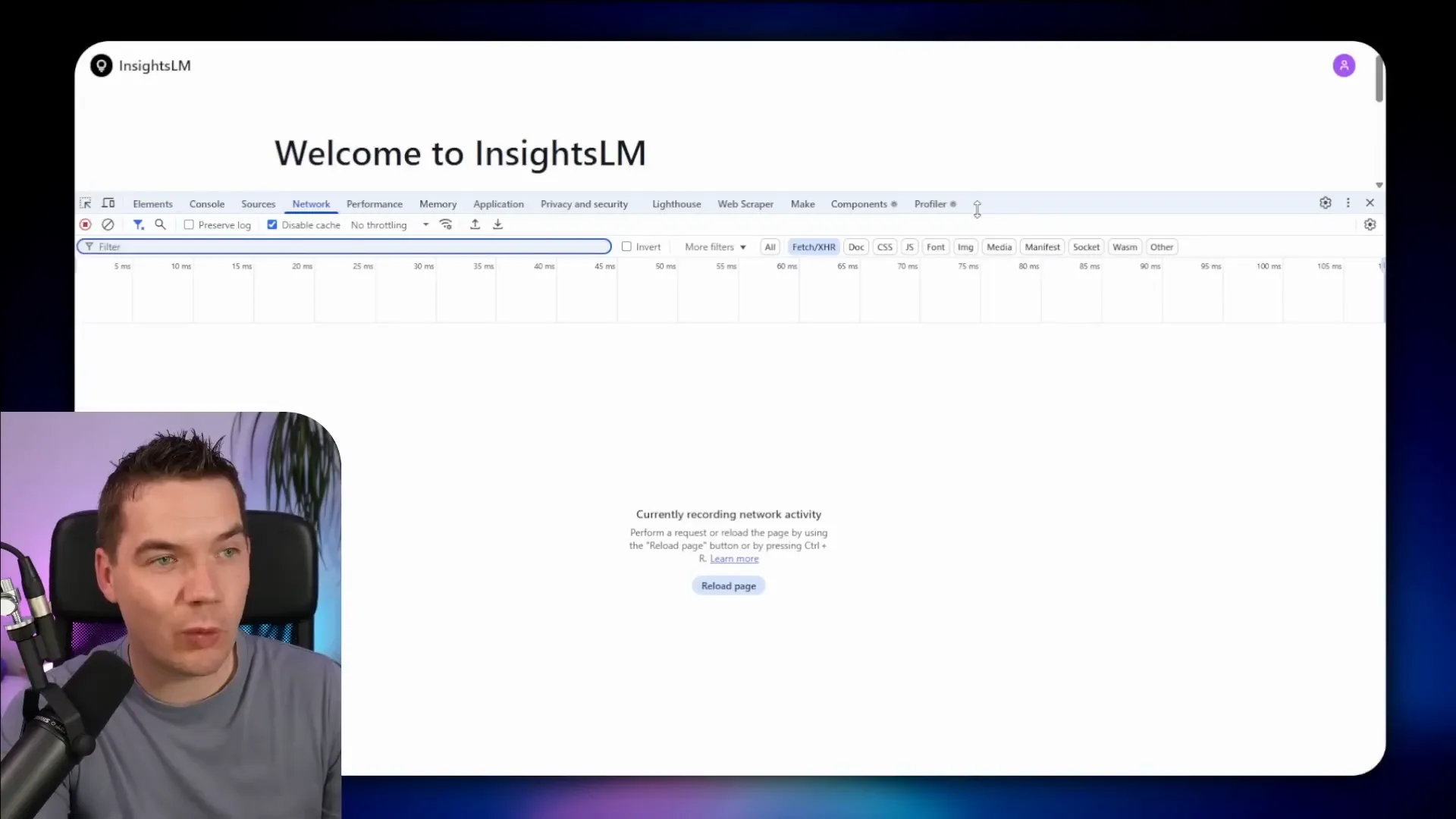

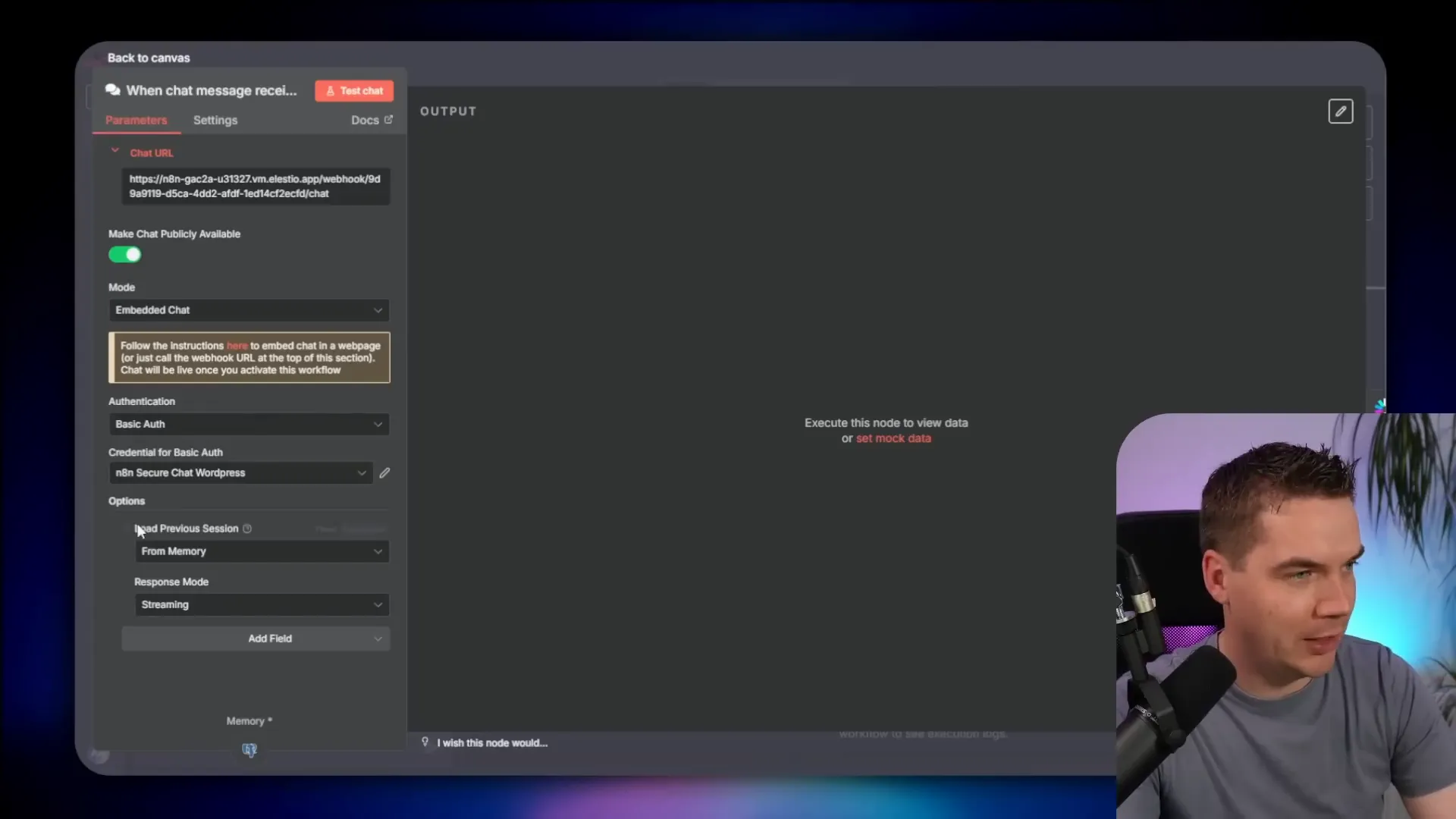

n8n provides a front-end chat embed that can be dropped into any page. That embed is convenient because it streams responses directly from n8n to the user’s browser. For public chatbots this is fine. For private, account-specific agents it is not.

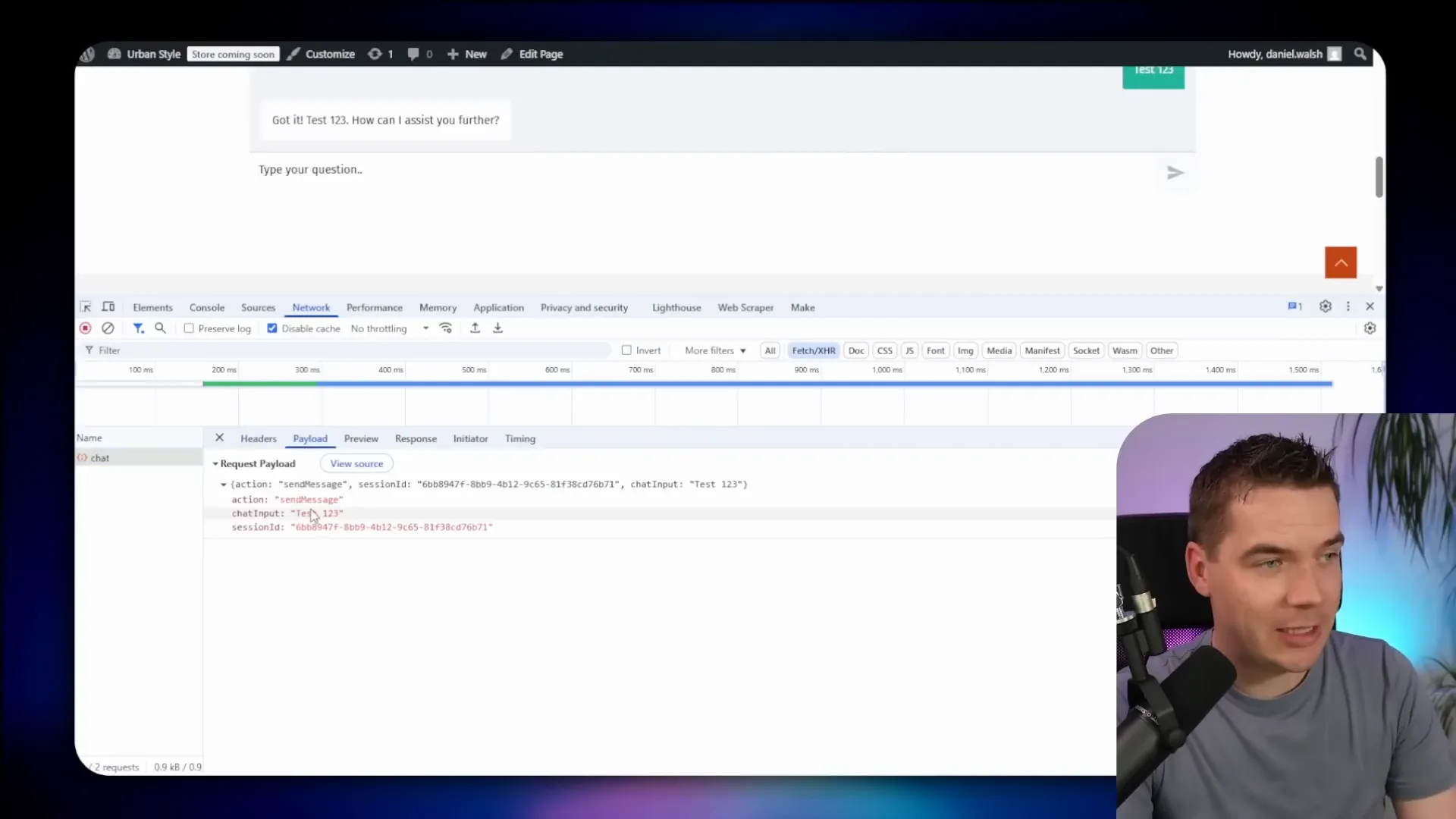

Why not? The browser sees the n8n webhook URL and any headers you include. A malicious user could open the browser dev tools, copy the webhook URL, change a header such as the customer ID, and then make requests that extract other customers’ data.

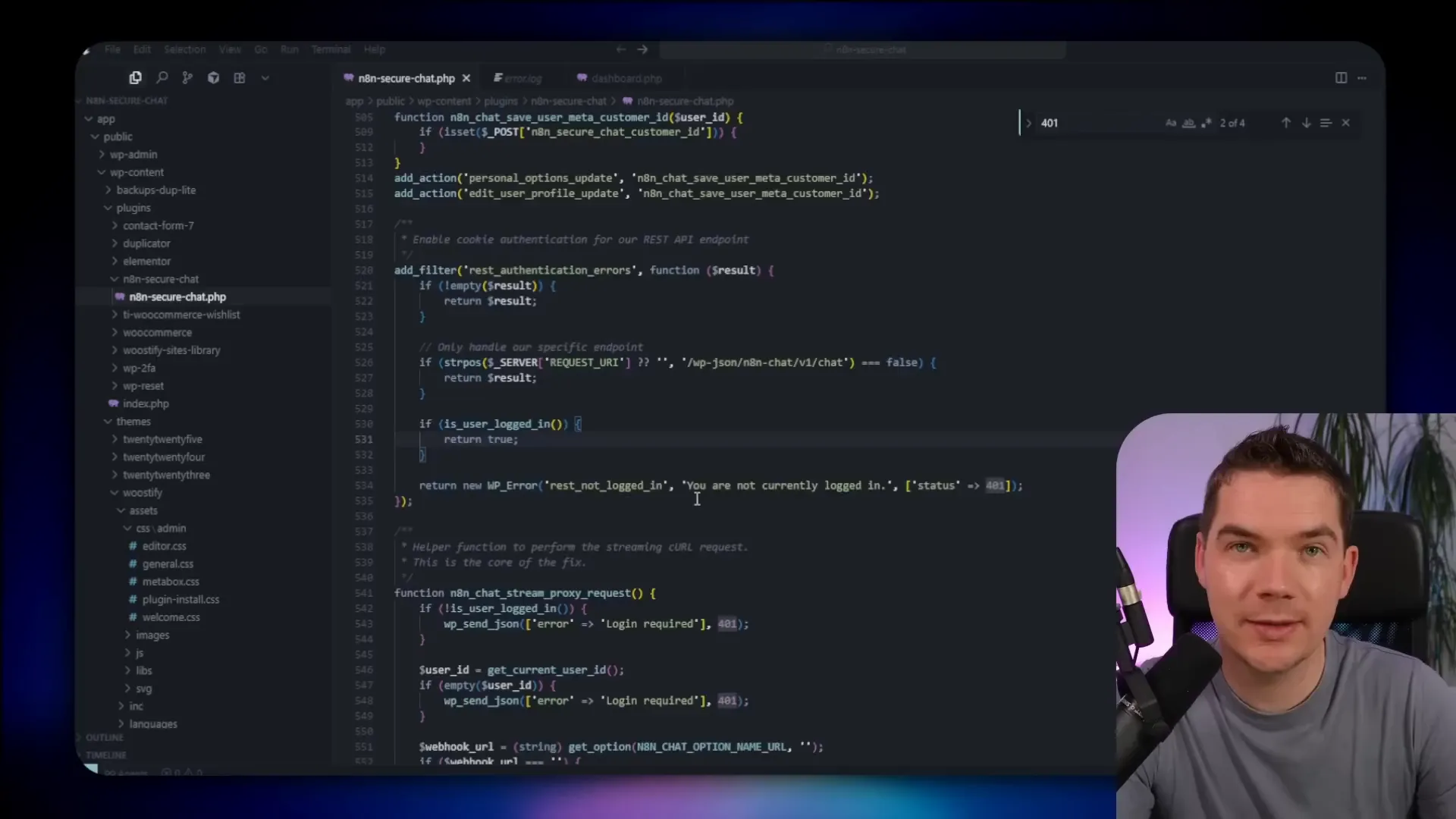

To prevent that, I created a proxy inside WordPress. The browser only talks to WordPress. WordPress authenticates the session, injects the correct customer ID, signs a JWT, and forwards the message to n8n. The user never sees the n8n webhook URL or any secrets. Everything between the browser and the WordPress server is TLS-encrypted.

The proxy also does one other important thing: it requires the user to be authenticated in WordPress. That connection between the logged-in user and a stored customer ID is what ensures the agent only fetches the correct records.
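The core decision the proxy makes can be sketched in a few lines. This is an illustration in Python, not the WordPress code from the demo; the session store, the field names, and `build_forward_payload` are all hypothetical. The point it shows is that only the chat message is taken from the browser, while the customer ID always comes from the server-side session.

```python
from typing import Optional

# Hypothetical session store: session token -> customer ID.
# In the article's setup, WordPress holds this mapping.
SESSIONS = {"sess-abc": 101, "sess-def": 202}


def customer_id_for_session(session_token: str) -> Optional[int]:
    """Return the customer ID linked to a logged-in session, or None."""
    return SESSIONS.get(session_token)


def build_forward_payload(session_token: str, browser_body: dict) -> dict:
    """Build the payload the proxy forwards upstream.

    Only the chat message is read from the browser. Any customer ID the
    browser sends is ignored; the ID comes from the server-side session.
    """
    customer_id = customer_id_for_session(session_token)
    if customer_id is None:
        raise PermissionError("not authenticated")
    message = str(browser_body.get("message", "")).strip()
    if not message:
        raise ValueError("empty message")
    return {"customer_id": customer_id, "message": message}
```

A request from session `sess-abc` that smuggles in `"customer_id": 202` is still forwarded with customer ID 101, because the forged field is never read.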

2. Verify request origin with short-lived JWTs

The proxy hides credentials, but n8n still needs to verify that each request really came from your WordPress server. If an attacker learned the webhook URL, for example by gaining admin access to WordPress and copying the proxy endpoint details, they could call n8n directly and bypass some protections. To make replay and spoofing attacks harder, I generate a signed JWT on the WordPress side for each chat message.

The JWT carries a minimal payload and expires quickly; in my setup it is valid for 60 seconds. n8n verifies the JWT before doing any work. If verification fails or the token has expired, the message is rejected.

JWTs serve as origin verification here. They are not a substitute for good authentication, but they make it much harder for an attacker to replay or forge requests to n8n from outside your server.
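To make the sign-then-verify step concrete, here is a minimal sketch using only the Python standard library. It assumes an HS256 token signed with a shared secret, which is one common choice and not necessarily the exact configuration used in the demo; `sign_jwt` and `verify_jwt` are hypothetical helper names. In production a maintained JWT library is usually the safer option.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes, ttl_seconds: int = 60,
             now: Optional[float] = None) -> str:
    """Sign claims as an HS256 JWT that expires after ttl_seconds."""
    issued = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {**claims, "iat": issued, "exp": issued + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the payload if the signature is valid and the token is unexpired."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Check the signature before trusting anything inside the token.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    payload = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if current >= payload["exp"]:
        raise ValueError("token expired")
    return payload
```

The receiving side calls `verify_jwt` first and stops on any `ValueError`. The `now` parameter exists only so the expiry check can be tested without waiting.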

3. Inject customer ID and filter all queries by it

Never accept a customer ID from the browser. Always inject it server-side after checking the user’s authenticated session. In my workflows I bind the customer ID parameter for fetch operations to the value that WordPress injects, so the language model never supplies it. The agent’s tools then call database queries that filter by that ID.

This pattern limits the damage from prompt injection attacks in which a user tries to trick the agent into returning other customers’ data. Even if the language model is coaxed into requesting different data, the application layer enforces the filter and prevents the leak.

For every tool that reads from the database or storage, I added explicit conditions that tie the requested record to the injected customer ID. That includes orders, order items, and document links.
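The idea of a tool whose customer filter cannot be changed by the model can be sketched with an in-memory SQLite database standing in for Supabase. The table layout and the `make_order_tool` helper are assumptions for illustration only. The model may pick the order ID, but the customer ID is fixed when the request starts.

```python
import sqlite3


def make_order_tool(conn: sqlite3.Connection, customer_id: int):
    """Return a lookup tool whose customer filter is fixed up front.

    The model can choose order_id, but the tool exposes no parameter
    that changes customer_id.
    """
    def fetch_order(order_id: int):
        row = conn.execute(
            "SELECT id, status FROM orders WHERE id = ? AND customer_id = ?",
            (order_id, customer_id),
        ).fetchone()
        return None if row is None else {"id": row[0], "status": row[1]}

    return fetch_order


# Stand-in data: order 6 belongs to customer 101, order 7 to customer 202.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(6, 101, "delivered"), (7, 202, "shipped")],
)

tool_for_101 = make_order_tool(conn, customer_id=101)
print(tool_for_101(6))  # the customer's own order
print(tool_for_101(7))  # another customer's order: no result
```

Asking the tool for order 7 while acting as customer 101 returns `None`, no matter how the request was phrased in the chat.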

4. Enable Row Level Security (RLS) on Supabase

Enabling RLS is one of the most important steps you can take. Supabase exposes a public anon key by design. If your tables are in the public schema and RLS is off, anyone can call the REST endpoints and fetch data with that anonymous key.

I tested this by temporarily disabling RLS. With RLS off I was able to hit the orders endpoint from Postman using the public anon key and receive every order in the database. That’s a catastrophic misconfiguration for private data.

Turn RLS back on and you close that hole. However, there’s an extra wrinkle: n8n’s Supabase integration often uses the service role key, which bypasses RLS. That means you still need to pay attention to which credentials n8n holds and how you use them. I’ll address least privilege next.
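The Postman check described above can also be scripted. The sketch below only builds the request and interprets a response, so it runs without network access; the project URL, key, and table name are placeholders you would replace, and `build_anon_probe` and `table_is_exposed` are hypothetical helper names. It relies on the Supabase REST convention of a `/rest/v1/<table>` path with the key sent in both the `apikey` and `Authorization` headers.

```python
import json
import urllib.request


def build_anon_probe(project_url: str, anon_key: str, table: str) -> urllib.request.Request:
    """Build an anonymous read of one row from a table's REST endpoint."""
    url = f"{project_url.rstrip('/')}/rest/v1/{table}?select=*&limit=1"
    return urllib.request.Request(
        url,
        headers={"apikey": anon_key, "Authorization": f"Bearer {anon_key}"},
        method="GET",
    )


def table_is_exposed(status: int, body: str) -> bool:
    """True if the anonymous request got at least one row back."""
    if status != 200:
        return False
    rows = json.loads(body)
    return isinstance(rows, list) and len(rows) > 0


# To run the probe against a real project (placeholders, not real values):
#   req = build_anon_probe("https://YOUR-PROJECT.supabase.co", "YOUR-ANON-KEY", "orders")
#   with urllib.request.urlopen(req) as resp:
#       print(table_is_exposed(resp.status, resp.read().decode()))
```

With RLS enabled and no policy granting anonymous access, the endpoint typically answers with an empty list, so `table_is_exposed` returns `False`. A `True` result for a table holding private data means the hole is open.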

5. Apply the principle of least privilege

Don’t give any component more permissions than it needs. In the demo, n8n only needs read access to customers and orders and write access to the n8n chat history table. It does not need the “god” service role key.

A better approach is to create a database user with a minimal permission set and use that account in n8n credentials. You can grant SELECT on the necessary tables and INSERT on the chat history table. Avoid storing high-privilege keys in tools that are exposed to multiple staff accounts.

In many cases n8n’s native modules default to the service role key. Where possible, replace that with scoped credentials, or use a custom database function that enforces limits and can be called by n8n without exposing the full service key.

6. Use step-up (multi-factor) authentication for sensitive actions

Not every request needs extra verification. For low-risk queries like “When will my package arrive?” the normal session may be enough. For higher-risk operations, like showing sensitive documents or high-value financial details, I added step-up authentication.

Step-up authentication triggers an additional verification when the workflow hits certain actions. For example, if the user asks to view a proof of delivery and the session hasn’t completed the step-up, n8n fetches the user’s phone number and sends an SMS one-time code. The user must return that code to the chat interface. n8n verifies it and only then generates the signed document link.

This approach reduces the blast radius if a WordPress session or admin account is compromised. An attacker who only controls the WordPress backend would not have the user’s phone to receive the one-time code. It also gives a clear audit trail for sensitive operations.
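The gate itself is simple to reason about in code. The following is a sketch of one way to model the challenge, not the n8n workflow from the demo: the `StepUpChallenge` class, the five-minute lifetime, and the three-attempt limit are all assumptions, and SMS delivery is left out entirely.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StepUpChallenge:
    code: str
    expires_at: float
    attempts_left: int = 3
    verified: bool = False


def start_challenge(ttl_seconds: int = 300, now: Optional[float] = None) -> StepUpChallenge:
    """Create a six-digit one-time code (sending it by SMS is out of scope here)."""
    issued = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"
    return StepUpChallenge(code=code, expires_at=issued + ttl_seconds)


def submit_code(challenge: StepUpChallenge, submitted: str,
                now: Optional[float] = None) -> bool:
    """Check a submitted code; every call on a live challenge uses one attempt."""
    current = time.time() if now is None else now
    if current >= challenge.expires_at or challenge.attempts_left <= 0:
        return False
    challenge.attempts_left -= 1
    ok = hmac.compare_digest(challenge.code.encode(), submitted.strip().encode())
    if ok:
        challenge.verified = True
    return ok


def release_document(challenge: Optional[StepUpChallenge],
                     sign_url: Callable[[], str]) -> str:
    """Only create the signed link once the step-up has been completed."""
    if challenge is None or not challenge.verified:
        raise PermissionError("step-up verification required")
    return sign_url()
```

Limiting attempts and lifetime matters because a six-digit code is easy to guess if an attacker can retry without limit.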

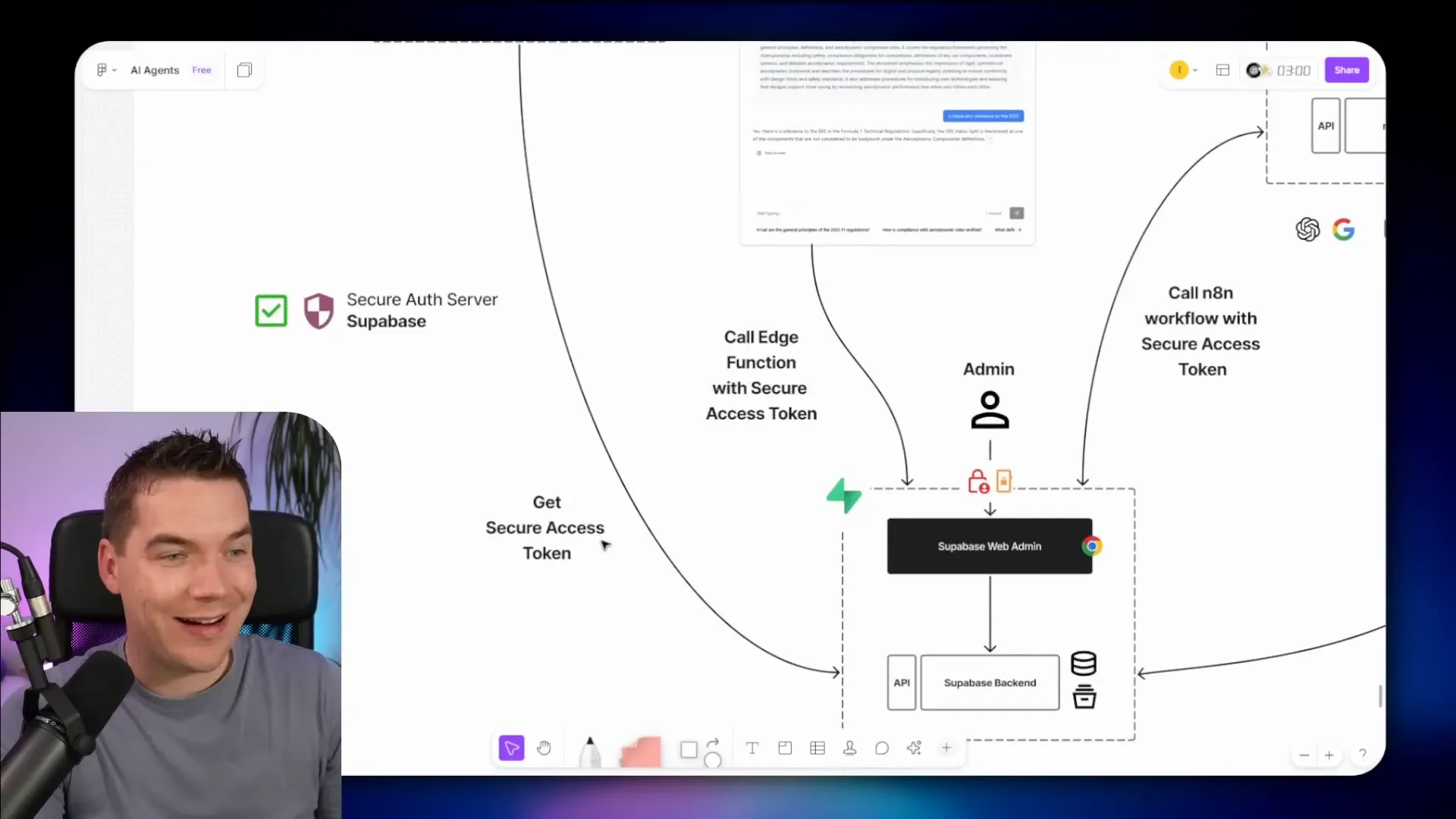

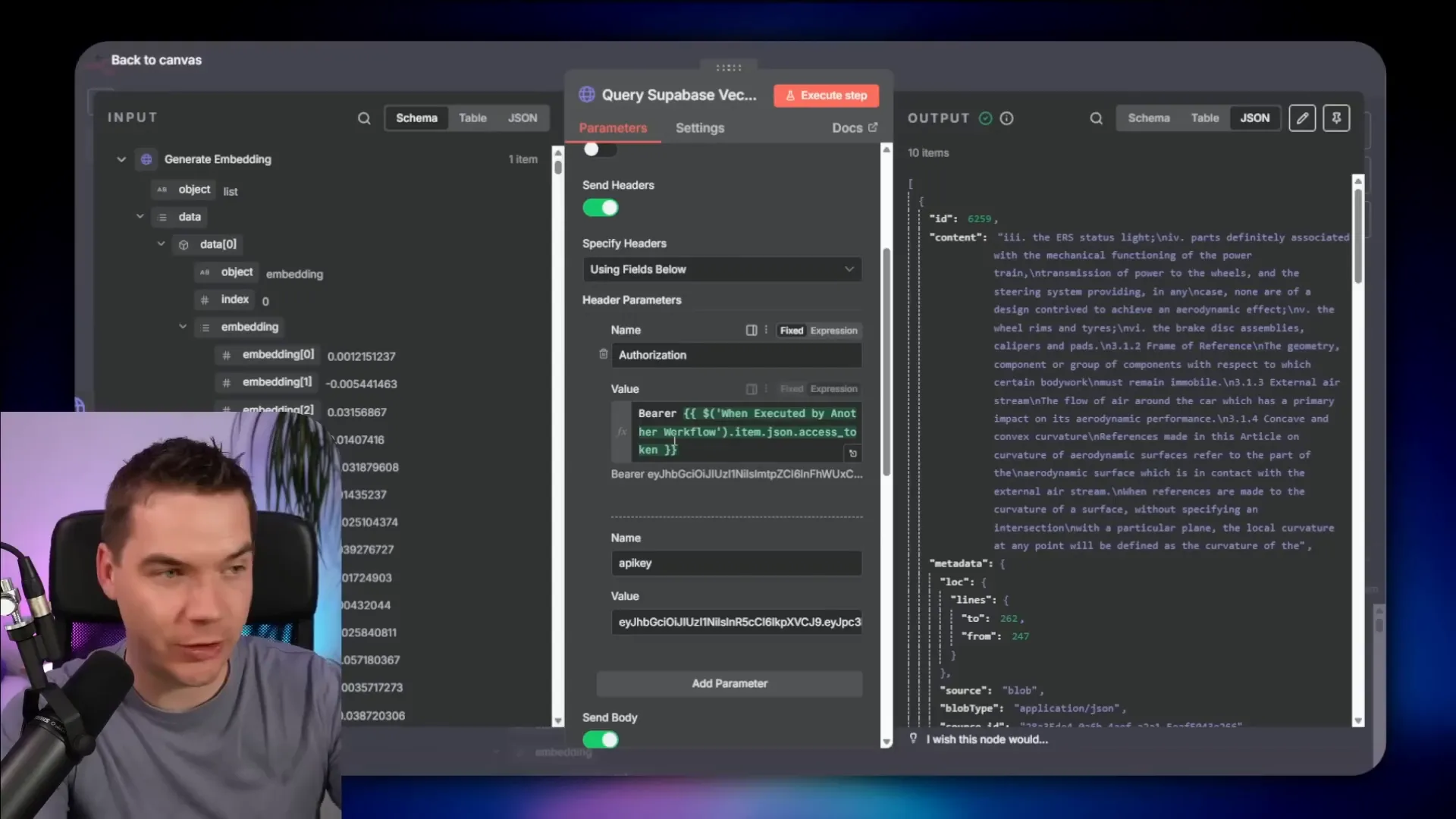

7. Enforce database-level access tokens and RLS for zero trust

Application-level security is necessary, but it’s fragile if any component is compromised. That’s why defense in depth matters. The most robust approach is to authenticate the user against a secure identity provider, produce a short-lived access token, and pass that token with each request to the database layer.

In practice I used Supabase auth for this pattern in a separate project. The flow is:

- User logs into a front-end app (React) via Supabase auth.

- Supabase returns a secure access token.

- Front end calls an edge function or API, passing the access token.

- The edge function verifies the token and calls n8n with that token attached.

- When n8n queries Supabase, it forwards the user’s access token so the database enforces RLS.

With this model, n8n never needs the service role key. Even if n8n were compromised, the attacker could only act as one user for a short time rather than having full admin access to all records. The database policies are the final gate.
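In request terms, the difference from the service-role setup comes down to which credential goes in the `Authorization` header. A small sketch, assuming the Supabase REST convention where `apikey` carries the public anon key and the bearer token is the logged-in user's access token; `supabase_headers` is a hypothetical helper name.

```python
from typing import Dict


def supabase_headers(anon_key: str, user_access_token: str) -> Dict[str, str]:
    """Headers for a database call made on behalf of one logged-in user.

    The bearer token is the user's own short-lived access token, so the
    database can apply that user's RLS policies. No service role key is
    involved at any point.
    """
    if not user_access_token:
        raise PermissionError("missing user access token")
    return {
        "apikey": anon_key,
        "Authorization": f"Bearer {user_access_token}",
    }
```

Because the helper refuses to build headers without a user token, a workflow step cannot quietly fall back to a broader credential.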

Additional protections I used

These are smaller but important steps that complement the main seven strategies.

Use HTTPS everywhere

All communication between the browser, WordPress, n8n, Supabase, and any edge functions must be encrypted with TLS. That prevents simple interception of tokens, JWTs, or sensitive payloads.

Rate limiting

Apply rate limiting at the proxy and API layers. Rate limits slow down brute force or automated scraping attempts and give you time to detect anomalies.
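As an illustration of what a proxy-level limit might look like, here is a small in-memory sliding-window limiter. It is a sketch under simple assumptions (a single process, limits keyed by customer ID or IP address); a real deployment would more likely use the rate limiting built into its WAF, web server, or a shared store.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowLimiter:
    """Allow at most max_requests per key within any window_seconds span."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        current = time.monotonic() if now is None else now
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window.
        while hits and current - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(current)
        return True
```

For example, `SlidingWindowLimiter(20, 60)` would let each key send 20 chat messages per minute and reject the rest until older requests age out.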

Input validation and sanitization

Validate all input from users and admins. Block or sanitize any data that could be used in SQL injection, OS command injection, or template injection. Treat user-supplied content as untrusted by default.

Rotate keys and secrets

Regularly rotate basic auth credentials and shared JWT secrets. That reduces the window of exposure if a secret leaks. Ideally store keys in environment variables rather than editable UI fields in WordPress.

Logging and monitoring

Log important events across the architecture: admin logins, JWT verification failures, repeated failed step-up attempts, and unusual API patterns. Set alerts so you can react quickly to suspicious activity.

Edge network protections

Use a Web Application Firewall (WAF) and DDoS protections such as Cloudflare. These services help prevent automated attacks, bot scraping, and basic exploitation attempts at the perimeter.

Isolate internal systems behind a DMZ

Where possible, keep internal services behind firewalls or on private networks. Only expose what must be public. Use a DMZ to buffer external traffic, and allow backend services to live on separate networks.

How the agent uses tools safely inside n8n

n8n workflows act as the agent’s toolkit. I built tools for the agent such as:

- fetch customer record

- fetch orders

- fetch order details and items

- generate signed URLs for documents

- escalate issues into a support inbox

Each tool receives a customer ID injected by the proxy. The tool’s conditions enforce that ID. If a tool tries to query a record outside that ID, the query returns no results. That makes the tool itself resilient to prompt injection inside the LLM content.

Why streaming helps UX

I enabled streaming in the chat embed. Streaming keeps users engaged because they see the agent type its response in real time. It feels more human and encourages iteration in conversation. Streaming also reduces perceived latency, which matters most when the system is under load, because users see a partial answer quickly while the rest of the response is still being generated.

Supabase storage patterns I used

Document assets such as invoices and proof-of-delivery photos are stored in Supabase storage buckets. I separated buckets into predictable folders keyed by customer ID so I can generate signed links scoped to the right record.

When the agent needs to show a document, I generate a signed URL with a short expiry. This signed URL is created server-side after the step-up checks and customer ID verification. The user then receives a short-lived link to view or download the document. The link keeps working until it expires, so keep the expiry short.
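Before asking the storage service to sign anything, it helps to check that the requested path really sits under the customer's folder. The sketch below assumes a hypothetical layout of `<customer_id>/orders/<order_id>/<file>`; the file names and both helper functions are illustrative, and the actual signing call to Supabase storage is not shown.

```python
import posixpath

# Hypothetical file names for the two document kinds in the article.
FILENAMES = {"invoice": "invoice.pdf", "proof_of_delivery": "proof-of-delivery.jpg"}


def document_path(customer_id: int, order_id: int, kind: str) -> str:
    """Build the storage path for a document under the customer's folder."""
    if kind not in FILENAMES:
        raise ValueError(f"unknown document kind: {kind}")
    return f"{customer_id}/orders/{order_id}/{FILENAMES[kind]}"


def is_owned_by(path: str, customer_id: int) -> bool:
    """True only if the normalized path sits inside the customer's folder."""
    normalized = posixpath.normpath(path)
    if normalized.startswith(("/", "..")):
        return False
    first, sep, _rest = normalized.partition("/")
    # Compare the whole first segment so "1010/..." never matches customer 101.
    return bool(sep) and first == str(customer_id)
```

Normalizing first means a path such as `101/../202/orders/7/invoice.pdf` is evaluated as `202/orders/7/invoice.pdf` and rejected for customer 101.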

Step-by-step: from front end to secure data fetch

Here’s the full flow for a typical secure request that touches a customer record:

- User is authenticated in WordPress or a separate front-end app.

- User asks a question in the chat widget on the dashboard.

- The browser sends the message to the WordPress proxy endpoint (never to n8n directly).

- WordPress confirms the user session and looks up the linked customer ID.

- WordPress generates a signed, short-lived JWT and injects the customer ID into the request payload.

- WordPress forwards the payload and JWT to the n8n workflow webhook.

- n8n verifies the JWT. If valid and unexpired, it decodes the customer ID and proceeds.

- The agent’s tools query Supabase, filtered by the customer ID. If the action needs a document, n8n generates a signed URL or calls an edge function that does so.

- If the action is sensitive and step-up is required, n8n initiates the verification flow (e.g., SMS code) before generating any documents or revealing detailed info.

- Once checks pass, n8n returns a response to WordPress and the proxy streams the final message back to the browser.

Handling the special case: n8n licensing and hosting

Licensing matters for commercial deployments. n8n has licensing tiers, including the Sustainable Use License, which covers many internal use cases, and enterprise options for commercial redistribution or white labeling. The line between “internal use” and “commercial use” can be blurry.

For example, if you embed n8n chat inside an e-commerce site to serve your customers, is that internal business usage? The license text is ambiguous on whether that model requires an enterprise license. I reached out to n8n licensing for clarification, but the boundaries were not fully clear at the time of my demo.

My practical approach: validate the licensing terms for your use case. If you plan to host n8n and expose it to the public or charge for a product whose core value derives from n8n, engage the vendor for explicit guidance.

Compliance and data protection considerations

When the agent processes personally identifiable information, you must think about regional data protection rules and contractual agreements.

Customer consent and privacy policies

In some jurisdictions, such as the EU or certain US states, you may need explicit consent to process personal data with automated agents. Update your privacy policy and terms to reflect AI usage and data handling practices. Describe what data is processed, why, and how long it persists.

Data processing agreements (DPAs)

Your relationship with the LLM provider matters. Major providers offer data processing addendums that set rules for retention and training. Verify the provider’s policy on retention, logging, and whether user data will be used to improve their models. Prefer providers that offer zero retention or explicit contractual guarantees.

Data residency

If you operate under GDPR or similar regimes, you may have restrictions on where data can be processed geographically. Check that your third-party services comply with residency rules or that you have appropriate safeguards.

When to avoid sending sensitive data to an LLM

Never send payment card details or very sensitive identifiers to an LLM. Avoid social security numbers, bank account numbers, medical records, and other high-risk PII. If you must work with sensitive data, consider running a private model inside your network so data never leaves your control.

Data minimization and anonymization

One effective mitigation is to anonymize or tokenize PII before sending it to the LLM. Replace names, emails, and account numbers with reversible tokens. Send only the minimal context the model needs to answer the question. After the model returns the response, map tokens back to real values on the secure server side. This reduces exposure if provider logs are compromised.

Perfect anonymization is hard. Use the technique to reduce risk rather than as a single point of defense.
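A reversible token mapping can be sketched as follows. This is a deliberately small illustration: the `PiiTokenizer` class, the token format, and the email pattern are assumptions, and it only handles email addresses plus values you already know from the customer record, such as the name.

```python
import re
from typing import Dict

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class PiiTokenizer:
    """Swap PII for placeholder tokens and restore it afterwards."""

    def __init__(self) -> None:
        self._value_to_token: Dict[str, str] = {}
        self._token_to_value: Dict[str, str] = {}

    def _token_for(self, value: str, label: str) -> str:
        # Reuse the same token when the same value appears again.
        if value not in self._value_to_token:
            token = f"[{label}_{len(self._value_to_token) + 1}]"
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def tokenize(self, text: str, known_values: Dict[str, str]) -> str:
        """Replace known values (value -> label) and any email addresses."""
        for value, label in known_values.items():
            if value:
                text = text.replace(value, self._token_for(value, label))
        return EMAIL_RE.sub(lambda m: self._token_for(m.group(0), "EMAIL"), text)

    def detokenize(self, text: str) -> str:
        """Map tokens in the model's reply back to the real values."""
        for token, value in self._token_to_value.items():
            text = text.replace(token, value)
        return text
```

The mapping lives only on your server for the duration of the request. The LLM sees `[NAME_1]` and `[EMAIL_2]`, and the reply is restored with `detokenize` before it is shown to the user.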

Operational hygiene: patching, updates, and staff practices

Security isn’t just architecture. It’s also how you operate the stack.

- Keep n8n, WordPress, Supabase, and system packages up to date.

- Use multi-factor authentication for admin access.

- Enforce strong password policies for staff accounts.

- Restrict admin privileges to a small group of trusted users.

- Monitor for security advisories and apply critical patches quickly.

I received an email warning of a security issue in an n8n release during my testing. That reminded me how quickly an out-of-date component can become a risk. Patch management must be part of any production deployment.

Putting the pieces together: secure vector search example

In a separate implementation I used Supabase vector search for content retrieval. The frontend obtained a Supabase access token on login. The access token was passed into an edge function that called an n8n workflow. The workflow generated an embedding and called a database function that performed a match. The database function accepted the user’s access token and enforced RLS.

As a result the entire search pipeline only returned documents the user could see. n8n didn’t need the service role key. If n8n were compromised an attacker could only access documents as that user for the token’s short lifetime.

Common pitfalls and how to avoid them

I want to call out common mistakes I saw while building this project and testing other people’s setups.

- Exposing service role keys in workflows: These keys bypass RLS and should never be used in public-facing tooling.

- Relying on browser-supplied customer IDs: The client is untrusted. Always inject IDs server-side after authentication.

- Using static tokens with long expiration: Short-lived tokens reduce replay risk. Rotate secrets often.

- Not having MFA for admin panels: Admins control the configuration and credentials. Protect those accounts.

- Ignoring infrastructure patching: Tools like n8n and WordPress receive security fixes. Apply them promptly.

How I tested data isolation

I created two test accounts. I logged in as one user and verified the agent returned only that user’s orders. I logged in as the second user and repeated the test. I then attempted to forge a request from the browser network tab by changing the customer ID header. The proxy blocked it. I tried requests to the Supabase REST endpoints with the anon key while RLS was disabled to show the risk. That proved why RLS must be on.

These tests gave me confidence that the layered protections worked together to prevent accidental or malicious data leaks.

What to expect complexity-wise for your team

This architecture is achievable but not trivial. Expect to spend time on:

- Implementing and testing the proxy and JWT signing.

- Designing RLS policies and testing database behavior under different tokens.

- Configuring least-privilege credentials and evaluating third-party integrations.

- Adding step-up flows and UX for one-time codes within the chat experience.

- Setting up logging, alerts, and incident response for suspicious patterns.

If your team lacks security experience, involve a security engineer for threat modeling and code review. The cost of getting this wrong is high.

Final technical checklist you can reuse

- Do not expose n8n webhooks directly to the browser for private agents.

- Proxy chat traffic through an authenticated server that injects customer IDs.

- Sign each proxied request with a short-lived JWT and verify it in n8n.

- Enable RLS on all relevant Supabase tables and storage buckets.

- Avoid using the Supabase service role key in external automations. Use scoped accounts.

- Require MFA for admin access to WordPress, n8n, and Supabase consoles.

- Use step-up authentication for actions that reveal sensitive documents or financial data.

- Minimize the PII you send to external LLM providers. Tokenize where possible.

- Keep all components patched and monitor security advisories.

- Log and alert on abnormal API patterns and repeated authentication failures.

Key trade-offs to consider

Security involves trade-offs. Each layer you add increases safety but also adds complexity and latency. For example:

- Generating short-lived tokens and verifying them adds extra work to every request, and extra API calls when a token must be checked against an identity provider.

- Step-up authentication improves security but adds friction for the user.

- Tokenization reduces PII exposure but requires reversible token mapping and more server-side logic.

Design with risk in mind. For low-risk information you may accept lighter controls. For high-risk data, insist on stricter measures and database-enforced policies.

Licensing and hosting choices

Your choice of hosting and software license affects cost and legal obligations. If you self-host n8n, you must follow its license terms. If your usage is public-facing or you build a product that depends heavily on n8n, get clarity from the vendor. Similarly, pick LLM providers that support your privacy and residency requirements, or run models in-house if needed.

Where to focus first if you want to build something similar

If you’re building a proof-of-concept, focus on three things first:

- Make the front end call a secure proxy rather than n8n directly.

- Ensure the proxy adds a server-side customer ID and signs a short-lived token.

- Enable RLS in the database and create a minimal test policy so the public anon key returns nothing for protected tables.

Once those are in place, expand with step-up flows and least-privilege credentials. Finally, add monitoring and rotate keys.

Final operating notes

Maintain an incident response plan. Know who will act if an alert fires. Have a rotation cadence for secrets. Document RLS policies and review them regularly. Keep admin access tightly controlled and reviewed periodically.

Building a secure multi-user AI agent is an exercise in layered defenses, clear ownership, and operational discipline. The reward is a self-service feature customers can trust and a reduction in repetitive support load. Security isn’t a single setting. It’s a set of practices you must repeat and validate continuously.