NotebookLM stands out as one of the most powerful AI research tools available today. What makes it remarkable is its ability to ground responses exclusively in the sources you provide, which keeps answers accurate and relevant. However, its closed nature rules out customization and self-hosting, which can be a barrier for businesses that want a tailored AI research assistant. Over three days, I built a NotebookLM clone called InsightsLM that overcomes these limitations. It is private, self-hosted, and customizable to fit specific business needs, and I built it without writing a single line of code.

Using Lovable to build the front end, with Supabase and n8n as the backend, I developed a fully functional app that replicates and extends NotebookLM’s core features. Best of all, InsightsLM is open source, so anyone can install, customize, improve, and even sell it. This article walks through InsightsLM’s features, architecture, and setup process, and shows how businesses can adapt and control AI-powered research tools for their own data.

Introducing InsightsLM: A Powerful AI Research Tool

InsightsLM is built as a Retrieval-Augmented Generation (RAG) system: it combines AI generation with retrieval of information from a company’s own knowledge base. Grounding responses in that data reduces the risk of hallucinations and incorrect answers. NotebookLM is arguably the best RAG application available today, and InsightsLM aims to replicate and extend that functionality in an open, customizable format.
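To make the retrieve-then-generate idea concrete, here is a minimal Python sketch of the "augmentation" step: retrieved chunks are numbered and placed in the prompt so the model answers only from them and can cite them. The `Chunk` fields and the prompt wording are illustrative assumptions, not InsightsLM’s actual prompt.

```python
# Minimal sketch of the "augmented" step in RAG: retrieved chunks are numbered
# and placed in the prompt so the model can cite them. The Chunk fields and
# the prompt wording are hypothetical, not InsightsLM's real prompt.
from dataclasses import dataclass


@dataclass
class Chunk:
    id: int
    source_title: str
    text: str


def build_grounded_prompt(question: str, chunks: list[Chunk]) -> str:
    """Assemble a prompt that restricts the model to the supplied chunks."""
    context = "\n\n".join(
        f"[{c.id}] ({c.source_title})\n{c.text}" for c in chunks
    )
    return (
        "Answer using ONLY the numbered sources below. "
        "Cite every claim with its source number, e.g. [2]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

The final instruction to admit when the sources lack an answer is what keeps a grounded assistant from falling back on the model’s general knowledge.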

The app supports uploading documents, web scraping, and even handling audio files like MP3s. It allows users to chat with the AI about those uploaded sources, jump directly to citations within documents for verification, and generate podcasts based on the content—a feature inspired by NotebookLM’s podcast generation capability.

Demo: How InsightsLM Works

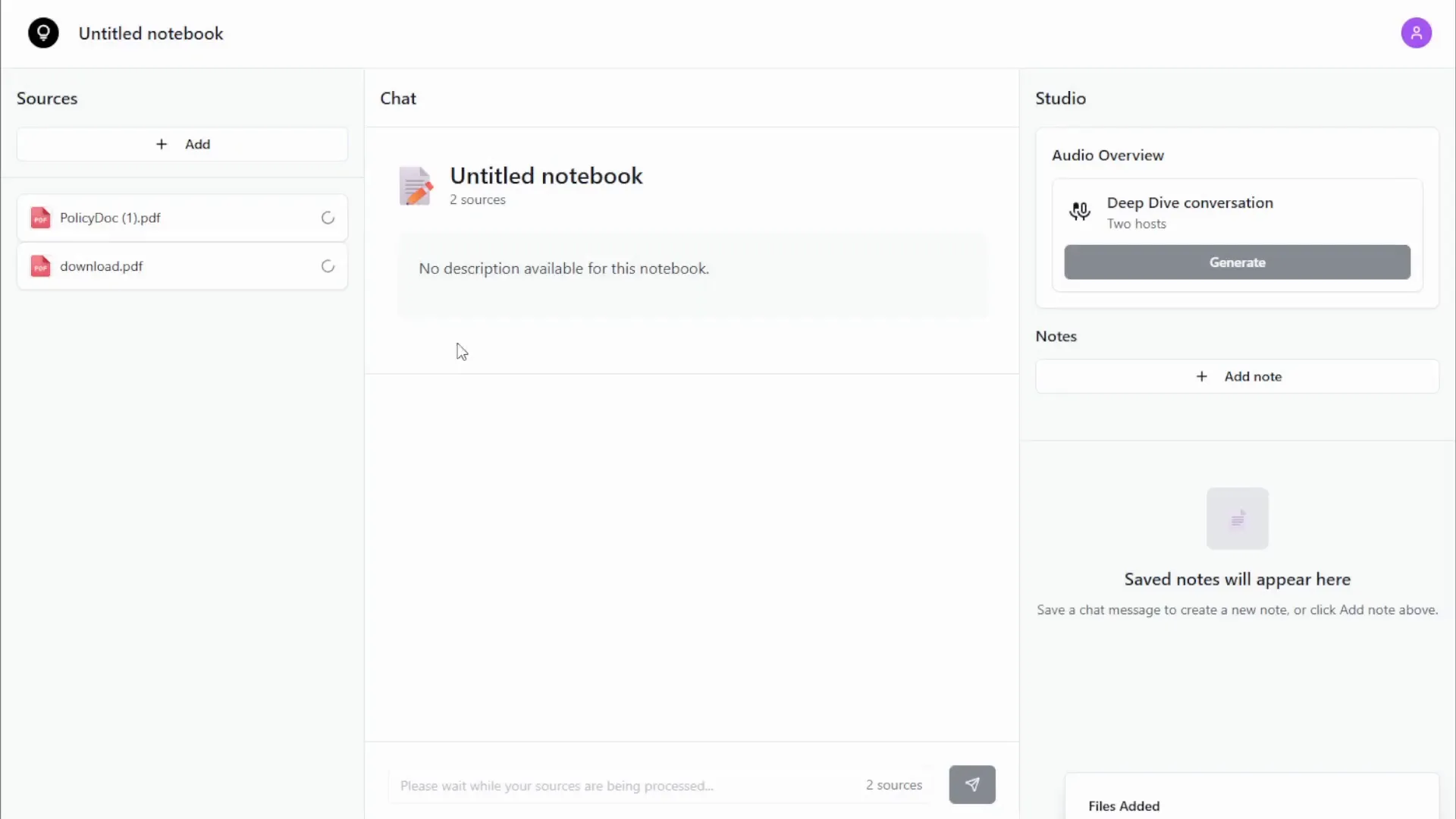

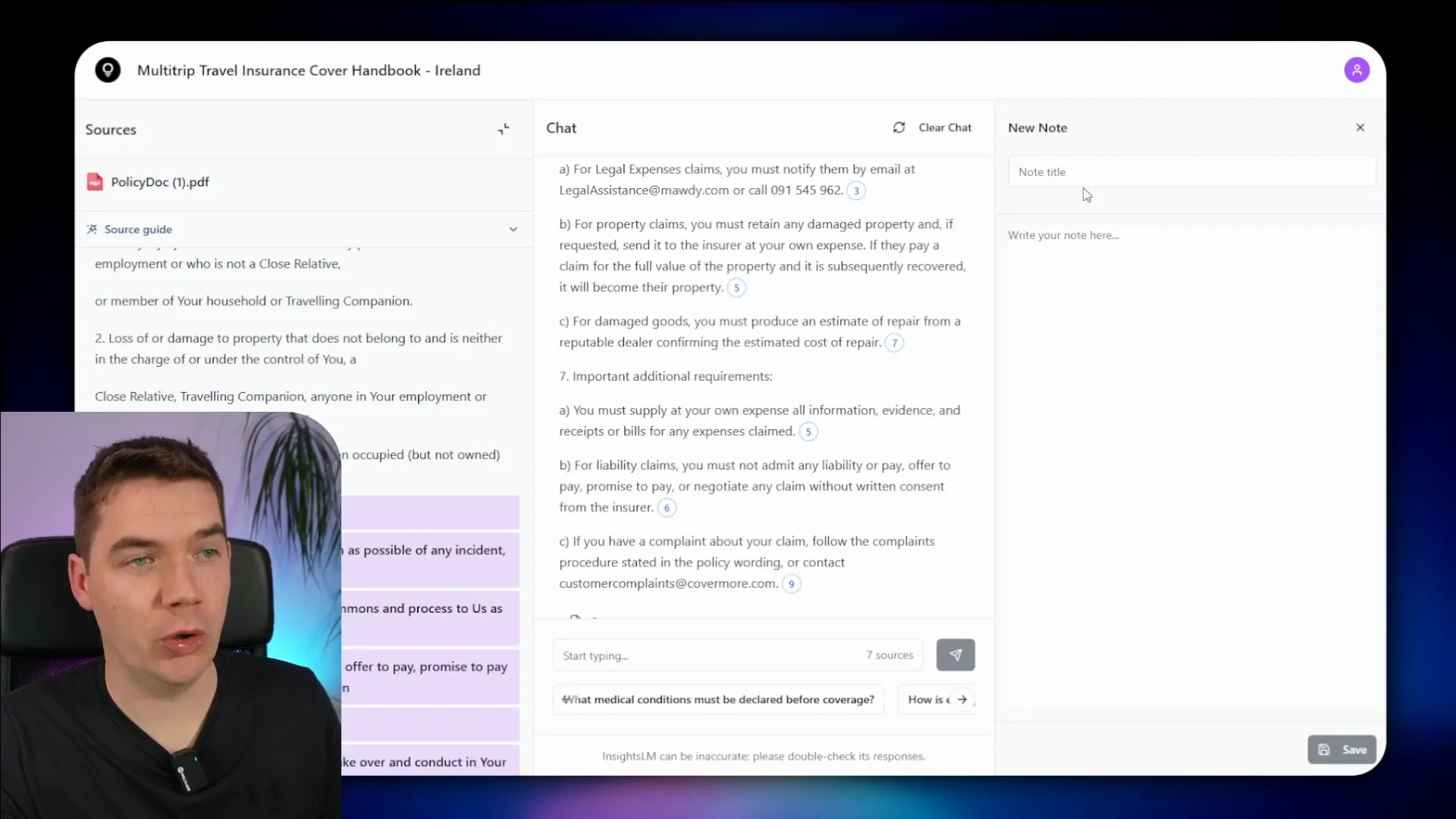

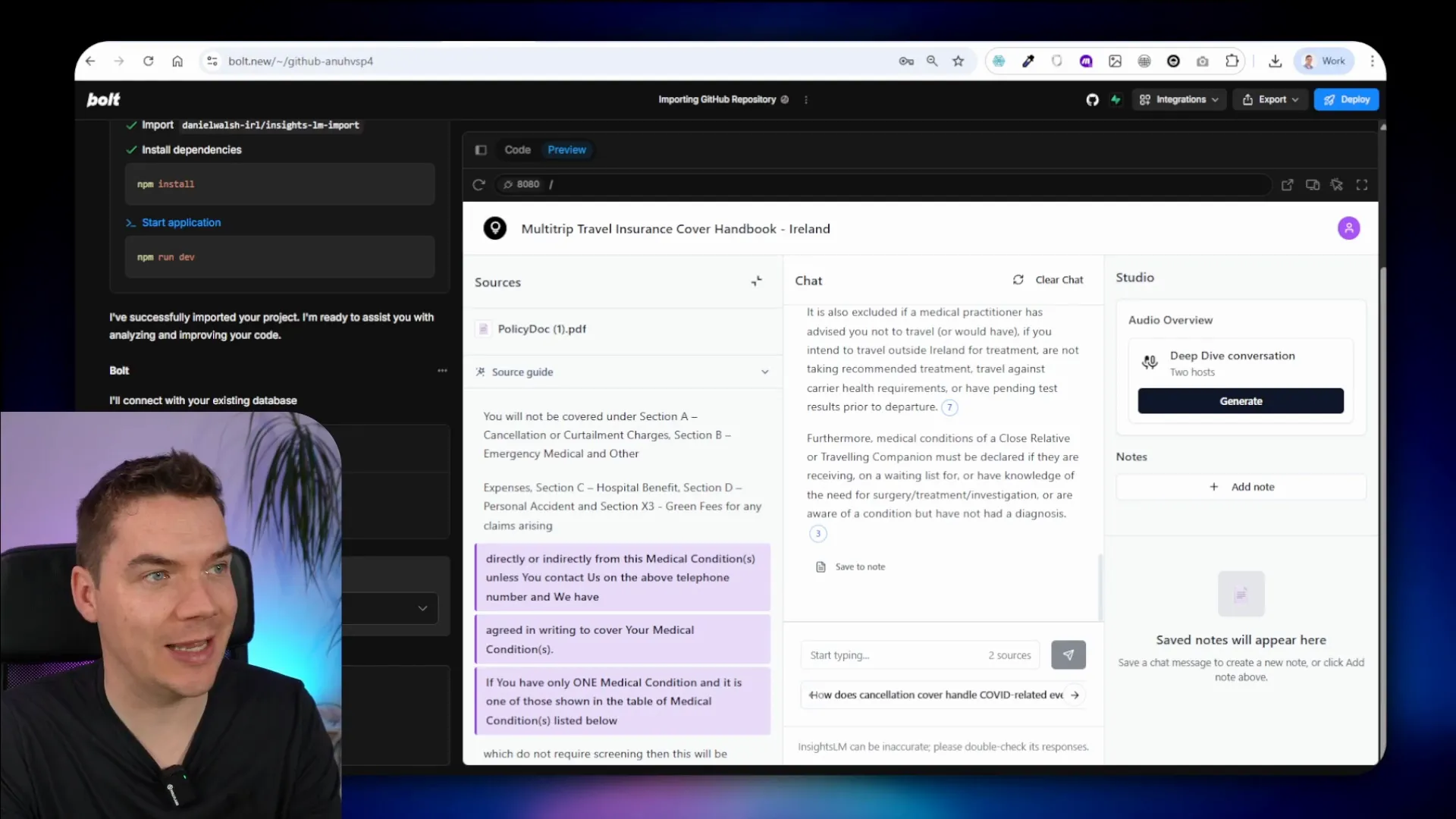

Upon logging in, users land on a dashboard where they can create notebooks. Each notebook can be grounded in documents the user uploads, URLs for web scraping, or audio files such as MP3s. For the demo, I used a travel insurance company as an example: I downloaded its policy documents and scraped relevant web pages to build a knowledge base.

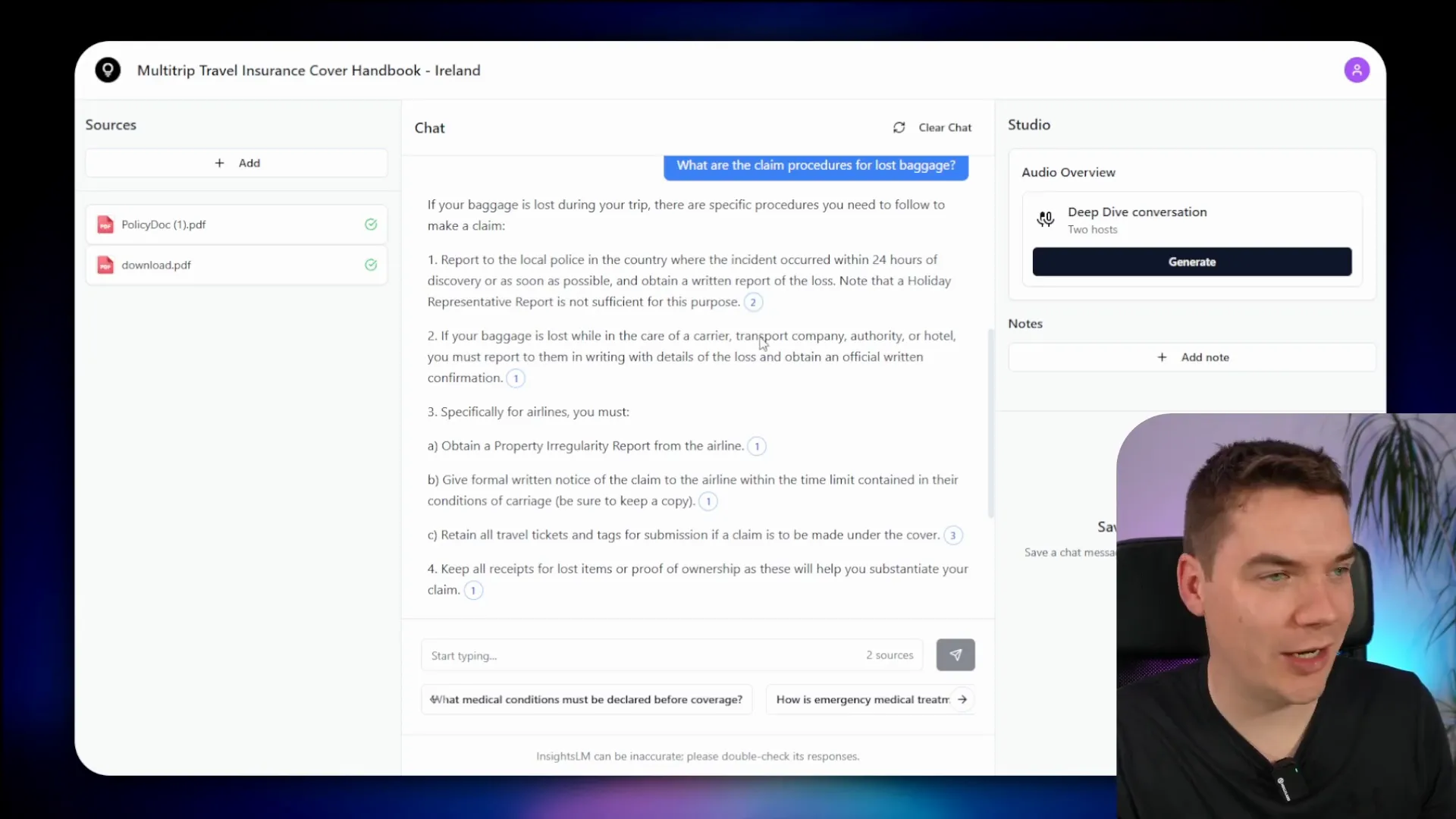

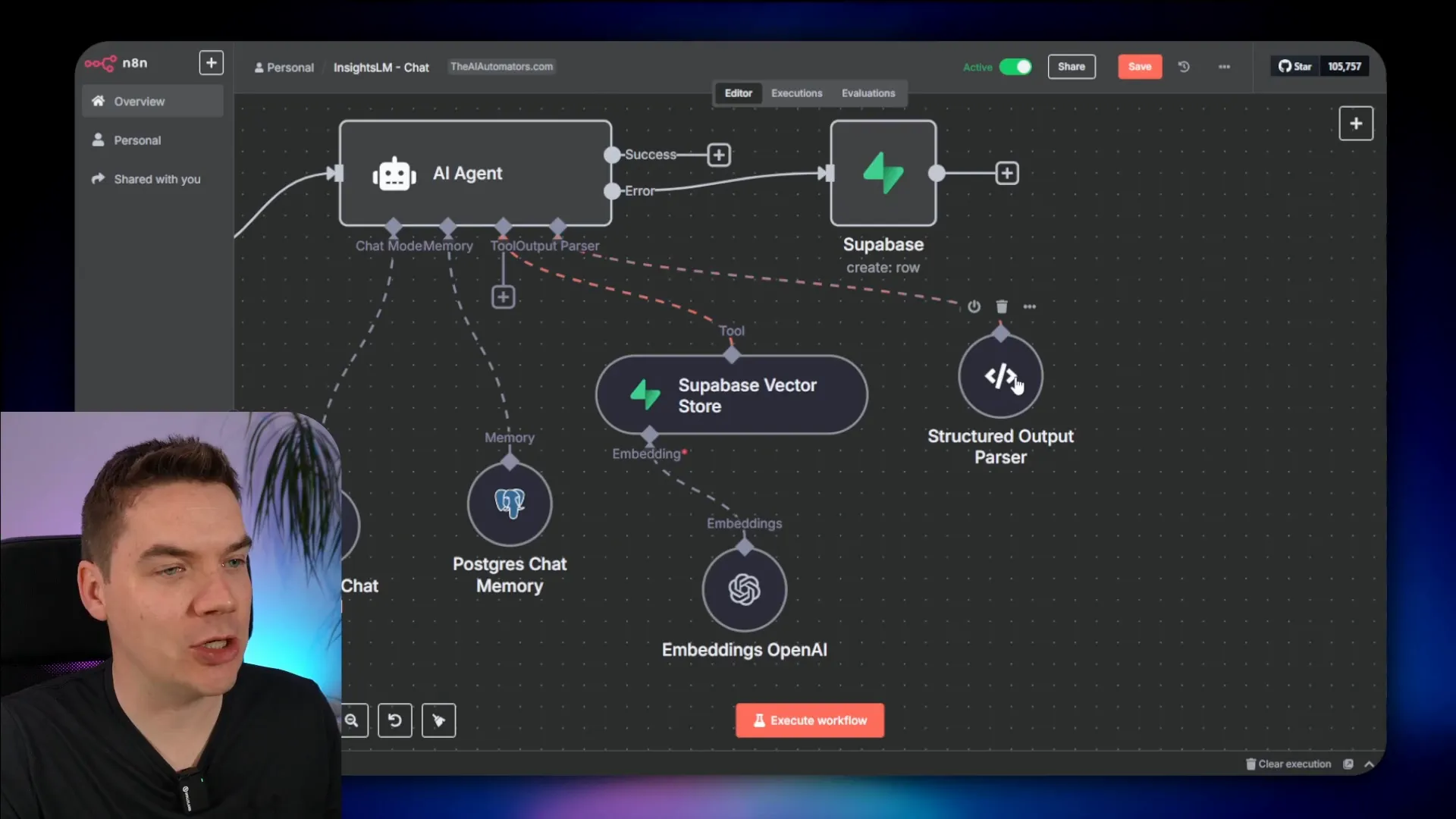

After uploading the policy PDFs, the app processes them using n8n workflows. These workflows index the content into a vector store, generate a notebook title and description, and create example prompts or questions to guide user interaction. When a question such as “What are the claim procedures for lost baggage?” is asked, the AI agent queries the vector store and generates a grounded response with inline citations.
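Conceptually, the vector store query ranks stored chunks by how close their embeddings are to the question’s embedding. The toy in-memory sketch below uses cosine similarity with a top-k cutoff; in InsightsLM this lookup happens in Supabase, and the three-dimensional vectors in the example are made up (real embeddings have hundreds or thousands of dimensions).

```python
# Toy in-memory stand-in for a vector store query: rank stored chunk
# embeddings by cosine similarity to the question embedding, keep the top k.
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return (chunk_id, score) pairs for the k most similar chunks, best first."""
    scored = [(cid, cosine_similarity(query, vec)) for cid, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

For example, `top_k([1.0, 0.0, 0.0], {"a": [1.0, 0.0, 0.0], "b": [0.0, 1.0, 0.0], "c": [0.9, 0.1, 0.0]}, k=2)` ranks chunk `a` first and `c` second; the retrieved chunks are then handed to the model as context.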

The citations link to the exact section of the source document, allowing users to verify the accuracy of the AI’s answer. For instance, the system might highlight that the user must report lost baggage to the local police within 24 hours, directly referencing the policy document.
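Because chunk metadata records line numbers, a citation can be resolved back to the exact passage to highlight. This sketch assumes a citation carries a 1-based, inclusive line range; the function name and that convention are assumptions rather than InsightsLM’s actual code.

```python
# Sketch: resolve a citation's line range to the passage the front end would
# highlight. Assumes 1-based, inclusive line numbers (a hypothetical convention).
def cited_passage(source_text: str, from_line: int, to_line: int) -> str:
    lines = source_text.splitlines()
    if not 1 <= from_line <= to_line <= len(lines):
        raise ValueError("citation line range is outside the source document")
    return "\n".join(lines[from_line - 1 : to_line])
```

Rejecting out-of-range citations, instead of silently clamping them, makes a model’s bad citation visible rather than highlighting the wrong text.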

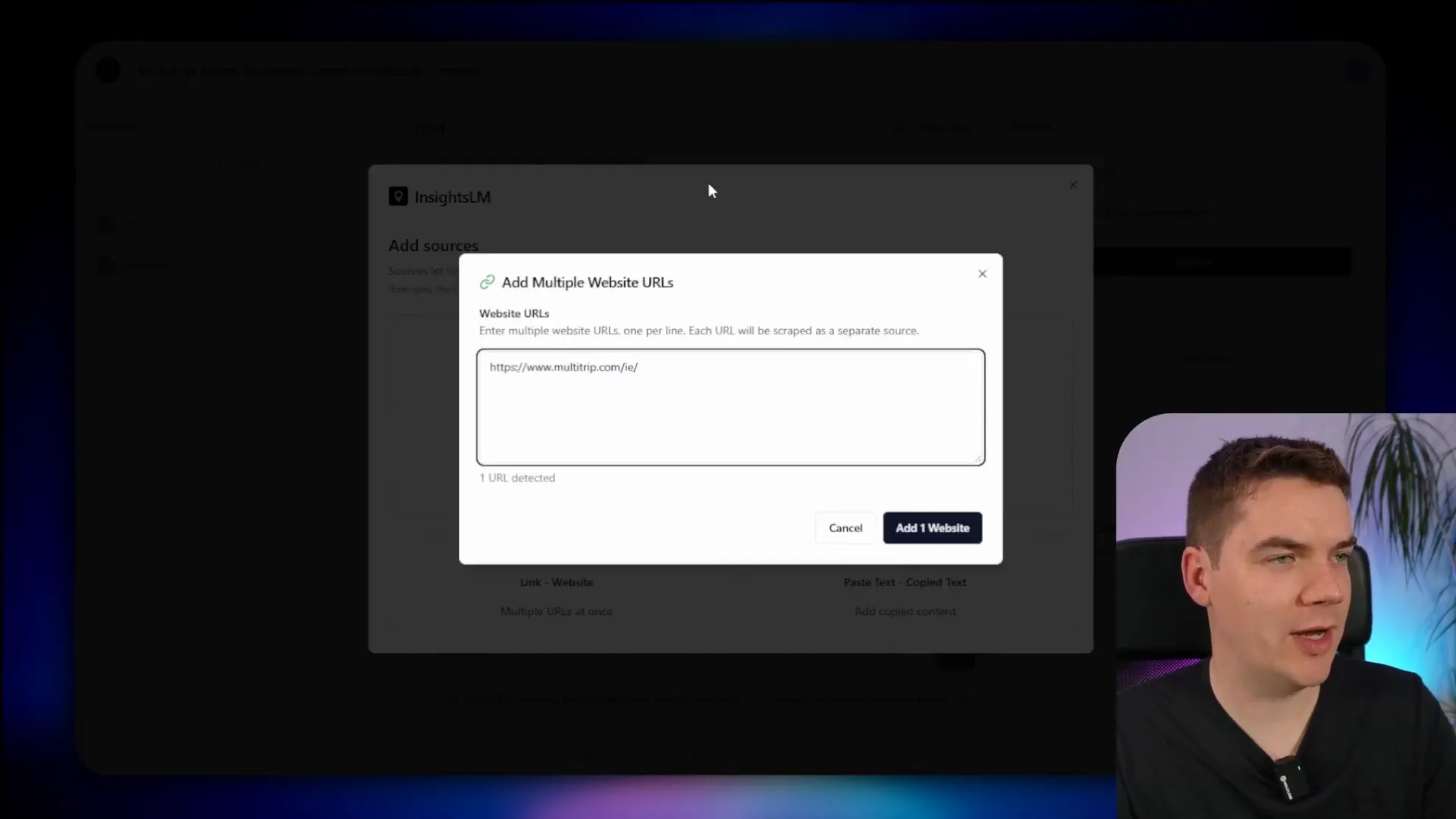

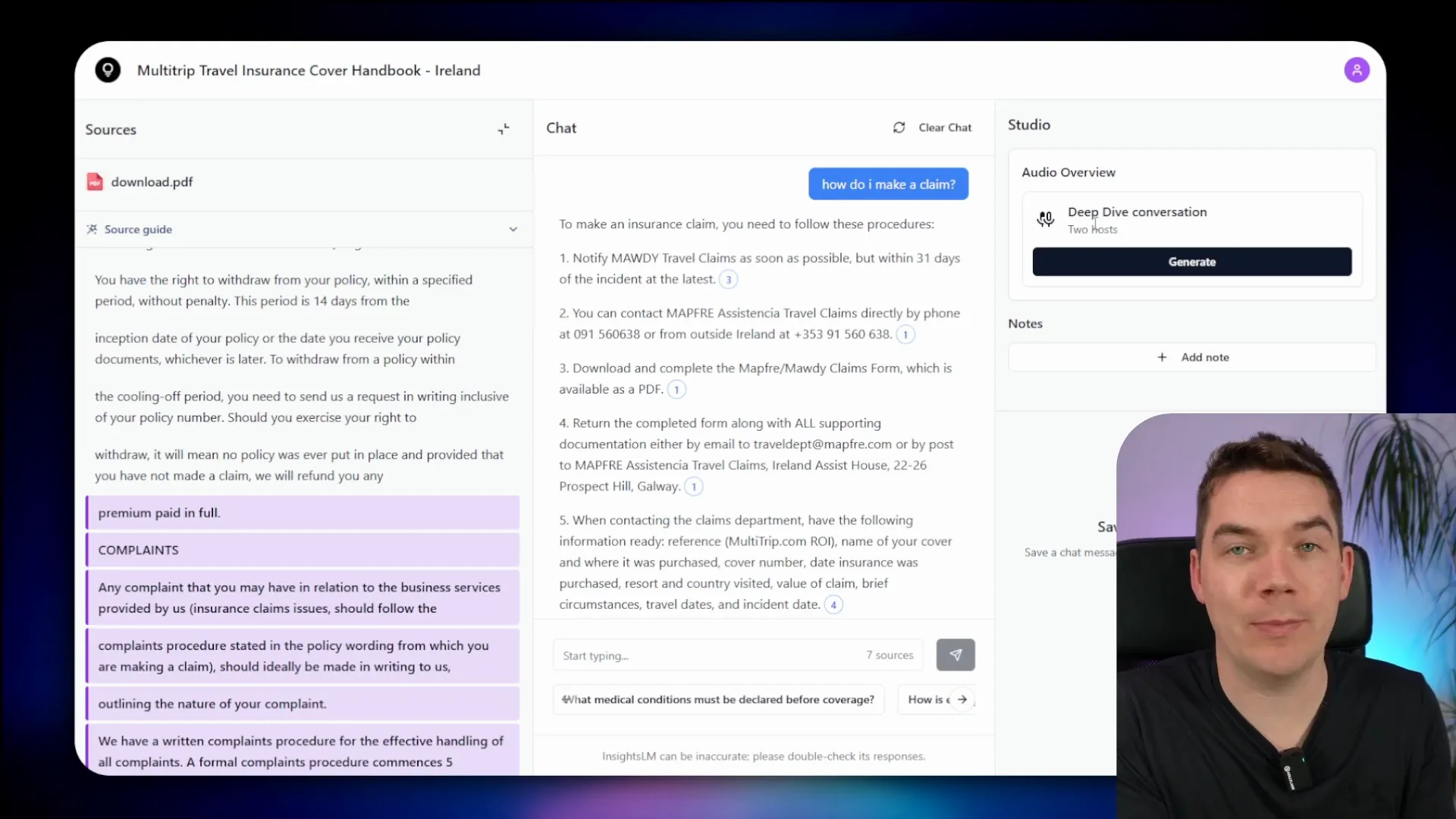

Adding web URLs as sources works similarly. I added several URLs from the company’s website, including FAQs and contact pages. These pages were scraped, processed, and chunked into the vector store. When I asked, “How do I make a claim?” the AI responded based on a combination of the policy document and the scraped web content, again with citations for easy verification.

One of the standout features is the podcast generation functionality. All sources in a notebook are sent to an AI model, which generates a podcast script. Two hosts then “discuss” the content in a podcast format, and the audio is synthesized using Google Gemini’s text-to-speech capabilities. This makes content consumption more engaging and accessible.
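Before synthesis, the two-host script has to be split into speaker turns. The sketch below assumes a hypothetical plain-text format with one `Name: text` line per turn; the host names and the format are illustrative, and the real prompt and text-to-speech request may be structured differently.

```python
# Sketch: split a two-host podcast script into (speaker, text) turns. The
# "Name: text" format and host names are hypothetical.
def parse_script(script: str, hosts: tuple[str, str]) -> list[tuple[str, str]]:
    turns: list[tuple[str, str]] = []
    for raw in script.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines between turns
        speaker, sep, text = line.partition(":")
        speaker = speaker.strip()
        if sep and speaker in hosts:
            turns.append((speaker, text.strip()))
        elif turns:
            # A line without a known speaker continues the previous turn.
            prev_speaker, prev_text = turns[-1]
            turns[-1] = (prev_speaker, f"{prev_text} {line}")
        else:
            raise ValueError(f"script must start with a host line: {line!r}")
    return turns
```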

Additionally, users can save notes from AI responses or create their own notes, keeping important insights easily accessible. The saved notes maintain citation links, ensuring continued traceability of information.

Architecture: How InsightsLM Is Built

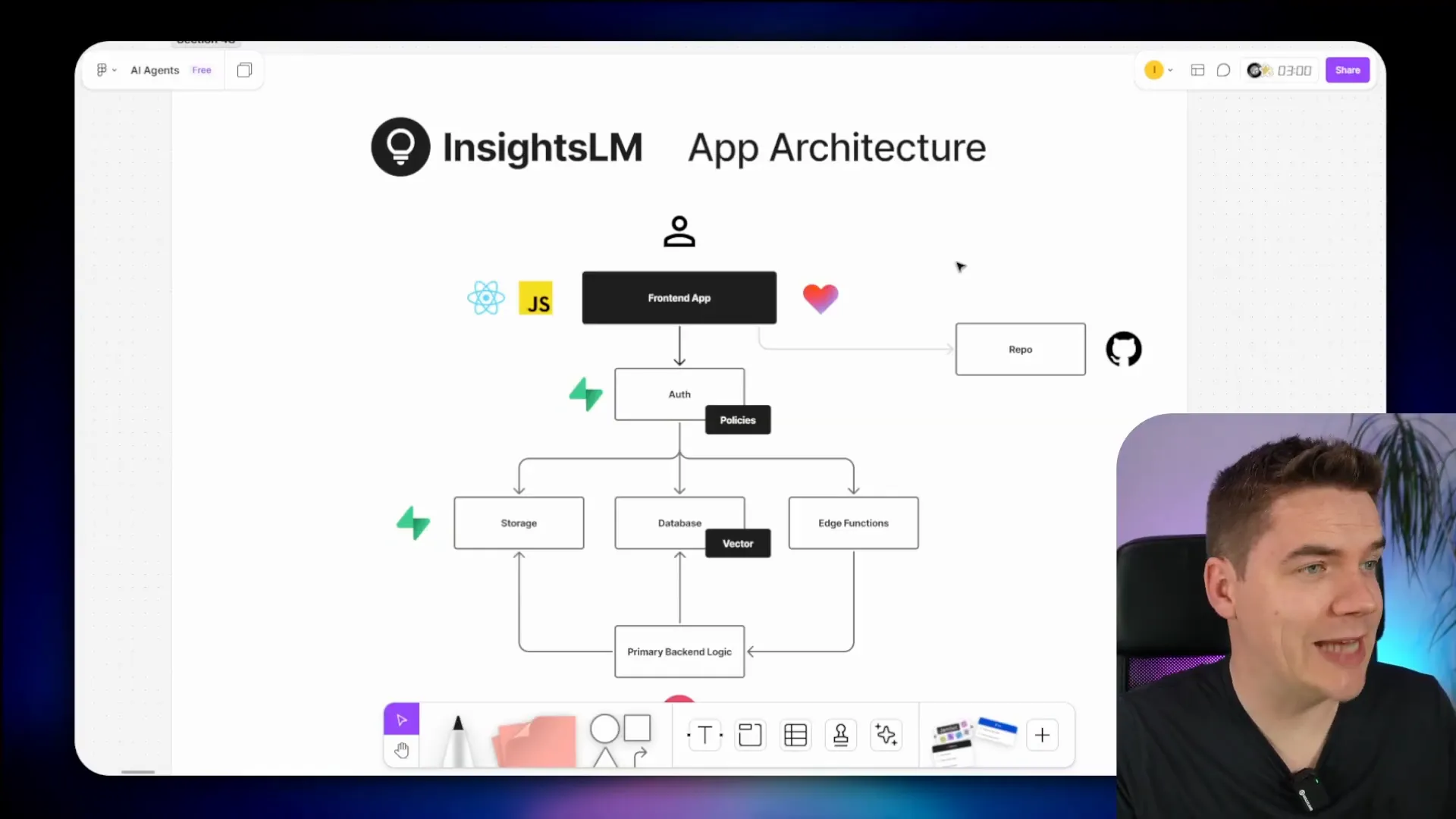

The InsightsLM front end is a modern React app built with Lovable. Users authenticate through Supabase, whose row-level security (RLS) policies ensure that each user can access only their own data. Notebooks, sources, and documents are saved in the Supabase database, while files such as PDFs are kept in Supabase Storage buckets.

When a user uploads a source, Supabase Edge Functions trigger n8n workflows to extract text, generate notebook titles and descriptions, chunk documents, and insert data into the vector store. This layered approach allows complex processing while maintaining a smooth user experience.

Though it’s possible to build the entire system using Supabase Edge Functions, n8n simplifies workflow management and accelerates development. For example, text extraction is handled by a dedicated n8n workflow that downloads files, extracts text, and returns the content for further processing.

The chat interface communicates with an AI agent workflow in n8n that queries the vector store and generates responses. This agent uses a structured output parser, instructing the AI to return JSON-formatted responses with exact citations referencing document chunks. The system even tracks line numbers within source documents to highlight relevant text on the front end.
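A structured output parser is only useful if malformed answers are caught before they reach the UI. This sketch validates a hypothetical JSON shape: a list of text segments whose citations carry `source_id`, `lines_from`, and `lines_to`. InsightsLM’s actual field names may differ.

```python
# Sketch: parse and validate the agent's JSON answer. The schema below is a
# hypothetical shape, not InsightsLM's exact output format.
import json
from dataclasses import dataclass


@dataclass
class Citation:
    source_id: str
    lines_from: int
    lines_to: int


@dataclass
class Segment:
    text: str
    citations: list[Citation]


def parse_agent_response(raw: str) -> list[Segment]:
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    segments = []
    for item in data["output"]:
        cites = [
            Citation(str(c["source_id"]), int(c["lines_from"]), int(c["lines_to"]))
            for c in item.get("citations", [])
        ]
        for c in cites:
            if c.lines_from > c.lines_to:
                raise ValueError(f"bad line range in citation: {c}")
        segments.append(Segment(str(item["text"]), cites))
    return segments
```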

Other workflows handle processing additional sources, such as raw text or multiple website URLs. Web scraping is done using Jina.ai to fetch markdown content, which is then saved and chunked for semantic search.
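Jina AI’s Reader is used by prefixing a page URL with `https://r.jina.ai/`, which returns the page as markdown-style text. The sketch below builds that URL and fetches it with Python’s standard library; optional headers such as an API key are left out, and the function names are illustrative.

```python
# Sketch: fetch a web page as markdown-style text through Jina AI's Reader,
# which is called by prefixing the target URL with https://r.jina.ai/.
import urllib.request

JINA_READER_PREFIX = "https://r.jina.ai/"


def reader_url(page_url: str) -> str:
    if not page_url.startswith(("http://", "https://")):
        raise ValueError("expected an absolute http(s) URL")
    return JINA_READER_PREFIX + page_url


def fetch_markdown(page_url: str, timeout: float = 30.0) -> str:
    """Network call: return the Reader's text rendering of the page."""
    with urllib.request.urlopen(reader_url(page_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

The returned text can then go through the same chunking and embedding path as an uploaded document.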

The podcast generation workflow aggregates sources, prompts an LLM to create a script for two hosts, and sends the script to the Gemini API for audio synthesis. The raw audio file is converted to MP3 using FFmpeg, then uploaded to storage and linked to the notebook for playback.
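The conversion step comes down to a single FFmpeg command. This sketch assumes the Gemini audio has already been base64-decoded into headerless PCM in signed 16-bit little-endian, mono, 24 kHz format; check the Gemini API documentation for the format your model actually returns. It also assumes your FFmpeg build includes the `libmp3lame` encoder.

```python
# Sketch: convert raw PCM audio to MP3 with FFmpeg. The PCM format
# (s16le, 24 kHz, mono) is an assumption to verify against the Gemini docs.
import subprocess


def ffmpeg_pcm_to_mp3_cmd(pcm_path: str, mp3_path: str,
                          sample_rate: int = 24000, channels: int = 1) -> list[str]:
    return [
        "ffmpeg",
        "-y",                     # overwrite the output file if it exists
        "-f", "s16le",            # input is headerless signed 16-bit little-endian PCM
        "-ar", str(sample_rate),  # input sample rate (must precede -i)
        "-ac", str(channels),     # input channel count (must precede -i)
        "-i", pcm_path,
        "-codec:a", "libmp3lame",
        mp3_path,
    ]


def convert(pcm_path: str, mp3_path: str) -> None:
    subprocess.run(ffmpeg_pcm_to_mp3_cmd(pcm_path, mp3_path), check=True)
```

Raw PCM has no header, so FFmpeg cannot detect its format; that is why the format, rate, and channel options must appear before `-i`.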

To keep the front end secure, it never directly triggers n8n workflows. Instead, Supabase Edge Functions act as secure wrappers, managing authentication and workflow execution. Some logic, like generating a note title, is implemented directly in Edge Functions when simple AI responses are needed.
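The secure-wrapper pattern is easy to see in miniature. In this Python stand-in for a TypeScript Edge Function, only server-side code reads the webhook URL and shared secret, and unauthenticated callers are rejected. The environment variable names, the payload, and sending the secret in the `Authorization` header are all hypothetical choices.

```python
# Sketch of the secure-wrapper pattern (Python stand-in for an Edge Function).
# Env var names, payload fields, and header format are hypothetical.
import json
import os
import urllib.request
from typing import Optional


def build_webhook_request(user_id: Optional[str], payload: dict) -> urllib.request.Request:
    """Build (but do not send) the server-side call to an n8n webhook."""
    if not user_id:
        # The real function would first verify the caller's Supabase session.
        raise PermissionError("unauthenticated request")
    url = os.environ["N8N_WEBHOOK_URL"]      # hypothetical secret name
    secret = os.environ["WEBHOOK_AUTH_KEY"]  # hypothetical secret name
    body = json.dumps({**payload, "user_id": user_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": secret},
    )
```

Because the secret stays on the server, a user inspecting browser network traffic sees only the Edge Function call, never the n8n webhook URL or key.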

Database Design

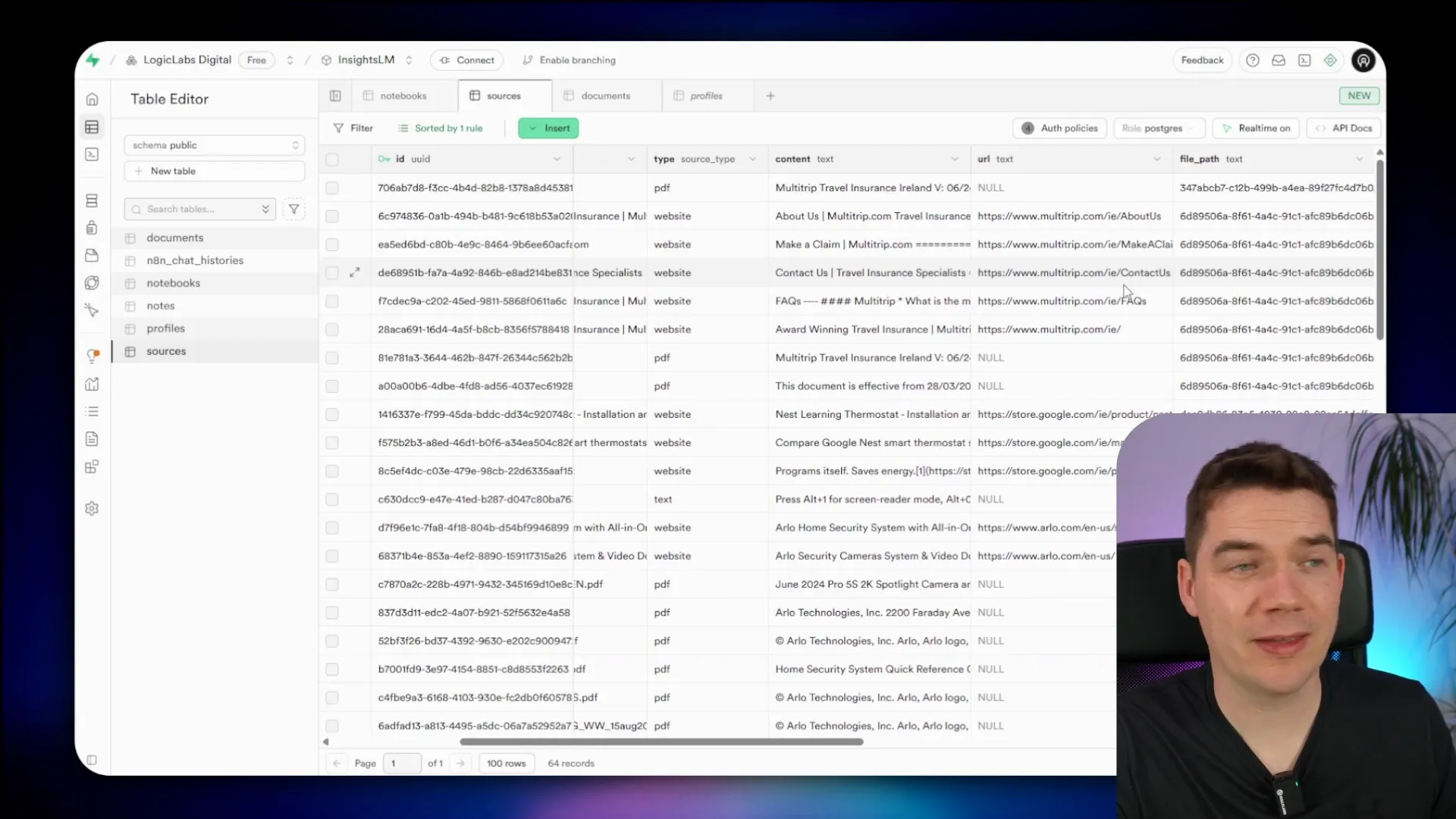

The database uses Supabase authentication to manage users, with a profiles table linked to user IDs for storing additional user information, such as profile images or LinkedIn URLs. Each user owns multiple notebooks, which in turn contain multiple sources.
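The ownership chain (user, notebooks, sources) and the effect of a row-level security policy can be modeled in a few lines. This sketch uses hypothetical field names. In Supabase the filtering happens in the database through a policy such as `USING (auth.uid() = user_id)`, not in application code.

```python
# Sketch of the ownership chain user -> notebooks -> sources, with a filter
# that mirrors what an RLS policy enforces. Field names are hypothetical.
from dataclasses import dataclass, field


@dataclass
class Source:
    id: str
    title: str


@dataclass
class Notebook:
    id: str
    user_id: str
    title: str
    sources: list[Source] = field(default_factory=list)


def visible_notebooks(current_user_id: str, notebooks: list[Notebook]) -> list[Notebook]:
    # Comparable to an RLS policy such as USING (auth.uid() = user_id).
    return [nb for nb in notebooks if nb.user_id == current_user_id]
```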

Sources store metadata including titles, descriptions, file paths, and content. Documents are split into 1,000-character chunks, each with an embedding vector for semantic search. Chunk metadata tracks line numbers and IDs to preserve context.
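Here is a minimal sketch of line-aware chunking that packs whole lines into chunks of at most 1,000 characters and records each chunk’s line range as metadata. The splitting rules are assumptions (no overlap; overly long lines are cut into pieces that share a line number), and the actual n8n text splitter may behave differently.

```python
# Sketch of line-aware chunking with 1-based line-range metadata.
# Splitting rules (no overlap, long lines cut) are assumptions.
from dataclasses import dataclass


@dataclass
class TextChunk:
    text: str
    line_from: int
    line_to: int


def chunk_by_lines(text: str, max_chars: int = 1000) -> list[TextChunk]:
    chunks: list[TextChunk] = []
    buf: list[str] = []
    size = 0  # length of "\n".join(buf)
    buf_from = buf_to = 0

    for lineno, line in enumerate(text.splitlines(), start=1):
        # Very long lines are cut into max_chars pieces sharing one line number.
        pieces = [line[i:i + max_chars] for i in range(0, len(line), max_chars)] or [""]
        for piece in pieces:
            new_size = size + len(piece) + (1 if buf else 0)  # +1 for the newline
            if buf and new_size > max_chars:
                chunks.append(TextChunk("\n".join(buf), buf_from, buf_to))
                buf, new_size = [], len(piece)
            if not buf:
                buf_from = lineno
            buf.append(piece)
            size = new_size
            buf_to = lineno

    if buf:
        chunks.append(TextChunk("\n".join(buf), buf_from, buf_to))
    return chunks
```

Keeping lines intact wherever possible is what makes the stored line numbers precise enough to highlight a cited passage later.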

Notes are stored separately and can be either AI-generated responses saved by users or custom user notes. Storage buckets are organized for sources, audio files, and public assets like images.

Building InsightsLM: What I Learned

I did not have a fully detailed plan at the start. Instead, I had a general idea of how the components would fit together, and I fleshed out the details during development. This approach allowed me to adapt and optimize the architecture as I progressed.

While AI-assisted coding speeds up development, it’s critical to review every change carefully. The AI might suggest redundant features or unnecessary complexity, so active involvement in the design and architecture is essential for a smooth build.

Setting Up InsightsLM: A Step-by-Step Guide

InsightsLM was built on Lovable, but importing projects with Supabase integration into Lovable is currently limited: the platform does not support importing or linking external GitHub repositories, and community templates don’t support Supabase either.

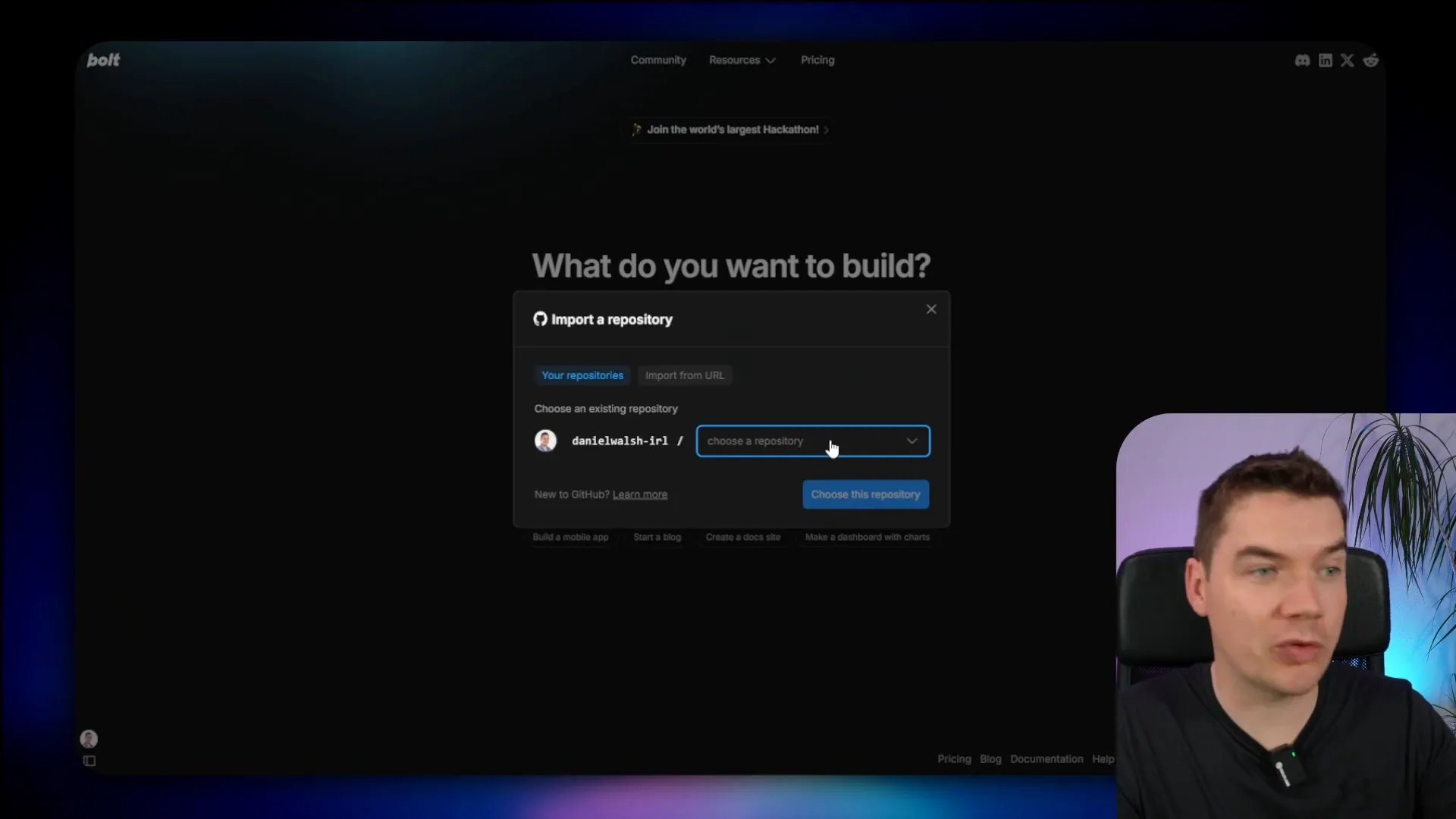

On the other hand, Bolt.new offers excellent GitHub import functionality and seamless Supabase integration. It automatically deploys database schemas and Edge Functions, making setup much easier.

Here’s the setup process I followed:

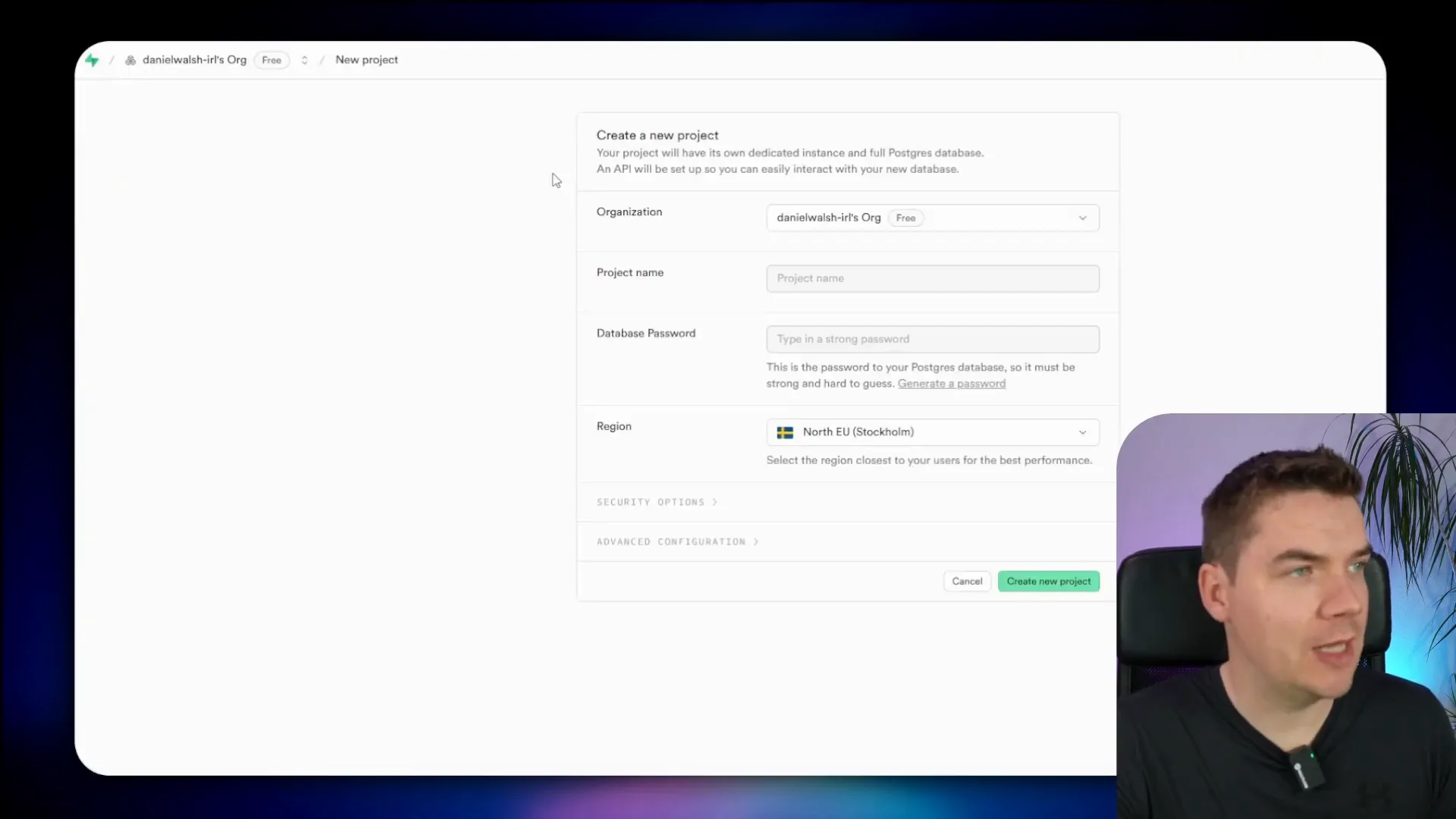

- Create a Supabase account and project: Go to supabase.com, create an account, and start a new project. Generate a database password and save it securely.

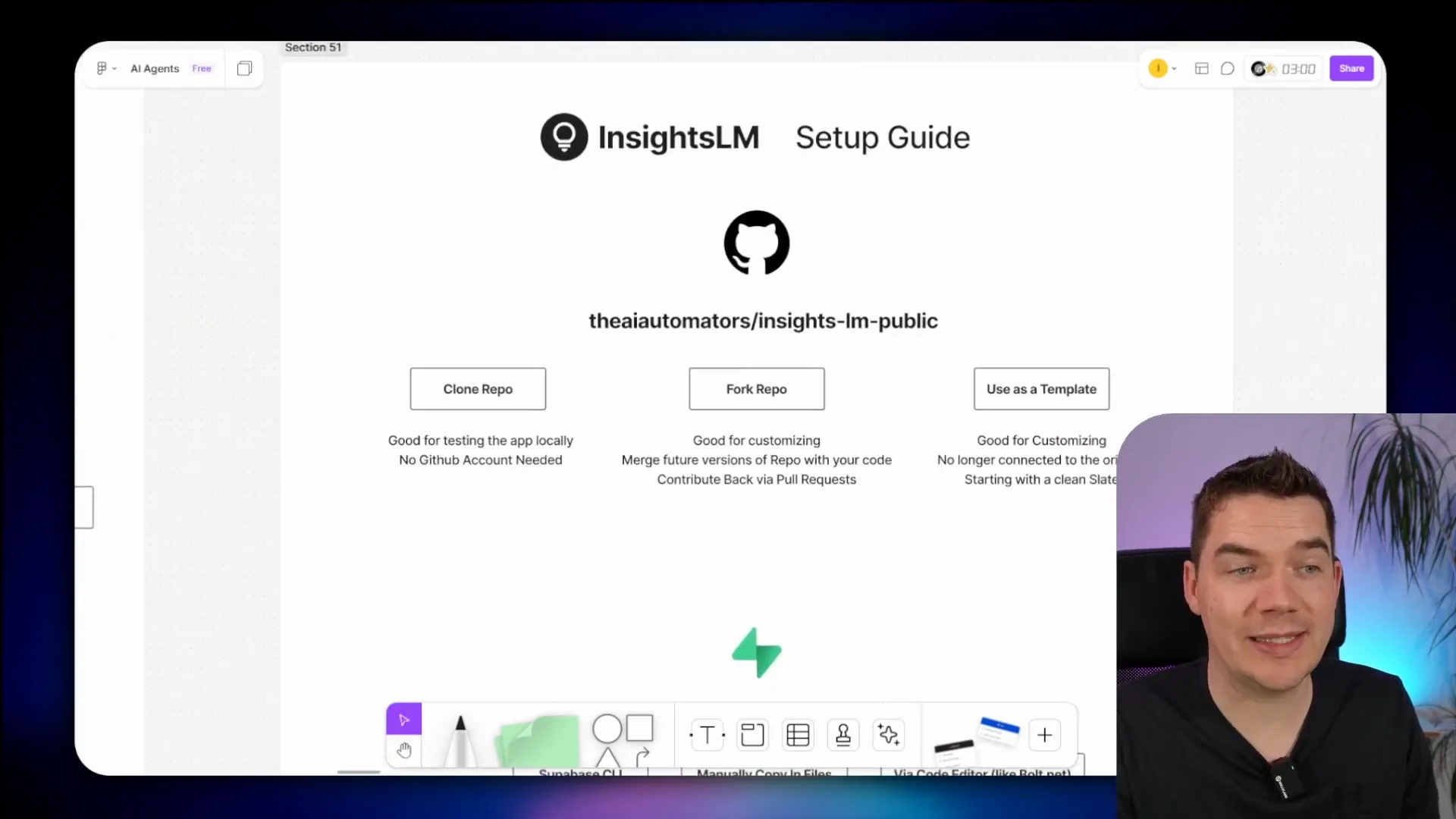

- Copy the InsightsLM GitHub repo: Visit the public repo and click “Use this template” to create a copy under your own GitHub account. This lets you customize and control your own version of the app.

- Import the project into Bolt.new: Log into bolt.new, select your forked repo, and import it. Connect Bolt to your Supabase project by authorizing API access and selecting your project.

- Deploy Supabase migrations: Bolt automatically applies database schema, policies, storage buckets, functions, and triggers via migration scripts.

- Import and configure n8n workflows: Download the JSON workflow files from the repo’s n8n folder or use the provided import workflow to automate this step. Configure credentials for Supabase, OpenAI, Google Gemini, and webhook authorization within n8n.

- Update Supabase secrets: Add webhook URLs, authorization keys, and OpenAI API keys to Supabase Edge Functions secrets to enable communication between components.

- Activate n8n workflows: Ensure all workflows except the text extraction one are activated to handle triggers properly.

- Test the app: Add users in Supabase authentication, log into the app, create notebooks, upload sources, and test AI chat and podcast generation features.

During setup, you’ll need to save various credentials and IDs in a notepad for easy reference. These include your Supabase project ID, n8n credential IDs, webhook authorization key, Google Gemini API key, OpenAI API key, and your n8n instance base URL.
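A small pre-flight check can catch missing values before you start testing. The setting names below are hypothetical placeholders for the items listed above; replace them with the names your Supabase secrets and n8n setup actually use.

```python
# Sketch of a pre-flight check for setup values. All names are hypothetical
# placeholders; adapt them to your own secrets and credentials.
import os
from typing import Mapping

REQUIRED_SETTINGS = (
    "SUPABASE_PROJECT_ID",
    "N8N_BASE_URL",
    "WEBHOOK_AUTH_KEY",
    "GEMINI_API_KEY",
    "OPENAI_API_KEY",
)


def missing_settings(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required settings that are absent or blank."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]
```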

FFmpeg must be installed on your n8n server to convert raw audio files into MP3 format for podcast playback. This step depends on your n8n hosting environment and is crucial for the audio generation feature.
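A quick way to confirm this prerequisite is to run a check on the machine (or container) that hosts n8n. This sketch only verifies that an `ffmpeg` binary is on the `PATH` and reads its version line; how you install FFmpeg still depends on your hosting environment.

```python
# Sketch: check that FFmpeg is installed and on the PATH of the n8n host.
import shutil
import subprocess
from typing import Optional


def ffmpeg_version() -> Optional[str]:
    """Return FFmpeg's first version line, or None if it is not on PATH."""
    path = shutil.which("ffmpeg")
    if path is None:
        return None
    result = subprocess.run([path, "-version"], capture_output=True, text=True, check=True)
    return result.stdout.splitlines()[0]
```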

Using and Customizing InsightsLM

Once set up, you can log into the app and start creating notebooks. Upload PDFs or add website URLs to build your knowledge base. Ask questions in natural language and get AI responses with citations. Save useful responses as notes and generate podcasts for a fresh way to engage with content.

Because the citation feature is built with prompt engineering, different large language models (LLMs) handle it differently. I found that Google Gemini 2.5 Flash and Claude 3.7 Sonnet performed well, but it’s worth testing several models to find the best fit for your use case.
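When comparing models, it can help to measure how many returned citations are actually usable. This sketch scores the share of citations that point to a known source and an in-range line span; collecting each model’s responses is left out, and the data shapes are assumptions.

```python
# Sketch: score citation validity for one model's answers. Data shapes are
# hypothetical: (source_id, line_from, line_to) tuples and per-source line counts.
from typing import Iterable


def citation_validity(
    citations: Iterable[tuple[str, int, int]],
    source_line_counts: dict[str, int],
) -> float:
    """Return valid/total citations, or 0.0 if there are none."""
    total = valid = 0
    for source_id, line_from, line_to in citations:
        total += 1
        n_lines = source_line_counts.get(source_id)
        if n_lines is not None and 1 <= line_from <= line_to <= n_lines:
            valid += 1
    return valid / total if total else 0.0
```

Running the same question set through each candidate model and comparing these scores gives a rough, repeatable signal alongside manual spot checks.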

You can customize the app’s front end in Bolt.new using vibe coding, which lets you change logos, add features, or adjust the interface. Changes sync back to your GitHub repository, but managing updates and resolving merge conflicts calls for good version control practices.

Open Source and Licensing Considerations

InsightsLM is open source, so you can use, customize, and even commercialize the app. Supabase is also open source, but n8n is distributed under its Sustainable Use License. This means n8n can be used for free for internal business purposes, such as deploying InsightsLM within a company.

If you plan to offer InsightsLM as a SaaS product, you may need an n8n enterprise license. You should clarify your specific use case with n8n or legal advisors to ensure compliance. Alternatively, you could convert n8n workflows into Supabase Edge Functions to avoid n8n licensing restrictions.

Alternative Setup Options

You can clone the InsightsLM repository to test locally or fork it to customize and potentially contribute improvements back to the main project. If you don’t use Bolt.new, you can deploy Supabase components manually using the Supabase CLI or by copying Edge Functions and SQL migration scripts.

Each approach has its trade-offs, but the open source nature of InsightsLM encourages experimentation and collaboration.

Summary of InsightsLM’s Capabilities

- Upload and chat with various document types including PDFs, web pages, and audio files.

- AI responses grounded in company data with inline citations linked directly to source material.

- Podcast generation that transforms notebook content into engaging audio conversations.

- Secure multi-user support with authentication and data isolation via Supabase policies.

- Workflow automation using n8n for text extraction, vector store management, AI chat, and audio generation.

- Open source, self-hosted, and customizable, allowing businesses to adapt the tool to their needs.

- Integration with modern tools like Lovable for front-end development and Bolt.new for deployment and management.

InsightsLM demonstrates how combining existing technologies and AI models can recreate and expand on commercial tools like NotebookLM. By open sourcing the project, I’ve made it possible for businesses and developers to take control of their AI research assistants, ensuring privacy, accuracy, and flexibility.